Posts tagged “scaryvikingsysadmins”

-

LISA12 Miscellany

A collection of stuff that didn't fit anywhere else:

St Vidicon of Cathode. Only slightly spoiled by the disclaimer "Saint Vidicon and his story are the intellectual property of Christopher Stasheff."

A Vagrant box for OmniOS, the OpenSolaris distro I heard about at LISA.

A picture of Matt Simmons. When I took this, he was committing some PHP code and mumbling something like "All I gotta do is enable globals and everything'll be fine..."

I finished the scavenger hunt and won the USENIX Dart of Truth:

-

Theologians

Where I'm going, you cannot come... "Theologians", Wilco

At 2.45am, I woke up because a) my phone was buzzing with a page from work, and b) the room was shaking. I was quite bagged, since I'd been up 'til 1 finishing yesterday's blog entry, and all I could think was "Huh...earthquake. How did Nagios know about this?" Since the building didn't seem to be falling, I went back to sleep. In the morning, I found out it was a magnitude 6.2 earthquake.

I was going to go to the presentation by the CBC on "What your CDN won't tell you" (initially read as "What your Canadian won't tell you": "Goddammit, it's pronounced BOOT") but changed my mind at the last minute and went to the Cf3 "Guru is in" session with Diego Zamboni. (But not before accidentally going to the Cf3 tutorial room; I made an Indiana Jones-like escape as Mark Burgess was closing the door.) I'm glad I went; I got to ask what people are doing for testing, and got some good hints.

Vagrant's good for testing (and also awesome in general). I'm trying to get a good routine set up for this, but I have not started using the Cf3 provider for Vagrant...because of crack? Not sure.

You might want to use different directories in your revision control; that makes it easy to designate dev, testing, and production machines (don't have to worry about getting different branches; just point them at the directories in your repo).

Make sure you can promote different branches in an automated way (merging branches, whatever). It's easy to screw this up, and it's worth taking the time to make it very, very easy to do it right.

If you've got a bundle meant to fix a problem, deliberately break a machine to make sure it actually does fix the problem.

Consider using git + gerrit + jenkins to test and review code.

The Cf3 sketch tool still looks neat. The Enterprise version looked cool, too; it was the first time I'd seen it demonstrated, and I was intrigued.

At the break I got drugs^Wcold medication from Jennifer. Then I sang to Matt:

(and the sailors say) MAAAA-AAAT you're a FIIINNE girl what a GOOOD WAAAF you would be but my life, my love and my LAY-ee-daaaay is the sea (DOOOO doo doo DOO DOO doot doooooooo)

I believe Ben has video; I'll see if it shows up.

BTW, Matt made me sing "Brandy" to him when I took this picture:

I discussed Yo Dawg Compliance with Ben ("Yo Dawg, I put an X in your Y so you could X when you Y"; == self-reference), and we decided to race each other to @YoDawgCompliance on Twitter. (Haha, I got @YoDawgCompliance2K. Suck it!)

(Incidentally, looking for a fully-YoDawg compliant ITIL implementation? Leverage @YoDawgCompliance2K thought leadership TODAY!)

Next up was the talk on the Greenfield HPC by @arksecond. I didn't know the term, and earlier in the week I'd pestered him for an explanation. Explanation follows: Greenfield is a term from the construction industry, and denotes a site devoid of any existing infrastructure, buildings, etc where one might do anything; Brownfield means a site where there are existing buildings, etc and you have to take those into account. Explanation ends. Back to the talk. Which was interesting.

They're budgeting 25 kW/rack, twice what we do. For cooling they use spot cooling, but they also were able to quickly prototype aisle containment with duct tape and cardboard. I laughed, but that's awesome: quick and easy, and it lets you play around and get it right. (The cardboard was replaced with plexiglass.)

Lunch was with Matt and Ken from FOO National Labs, then Sysad1138 and Scott. Regression was done, fun was had and phones were stolen.

The plenary! Geoff Halprin spoke about how DevOps has been done for a long time, isn't new and doesn't fix everything. Q from the audience: I work at MIT, and we turn out PhDs, not code; what of this applies to me? A: In one sense, not much; this is not as relevant to HPC, edu, etc; not everything looks like enterprise setups. But look at the techniques, underlying philosophy, etc and see what can be taken.

That's my summary, and the emphasis is prob. something he'd disagree with. But it's Friday as I write this and I am tired as I sit in the airport, bone tired and I want to be home. There are other summaries out there, but this one is mine.

-

Flair

Silly simple lies / They made a human being out of you... "Flair", Josh Rouse

Thursday I gave my Lightning Talk. I prepared for it by writing it out, then rehearsing a couple times in my room to get it down to five minutes. I think it helped, since I got in about two seconds under the wire. I think I did okay; I'll post it separately. Pic c/o Bob the Viking:

Some other interesting talks:

@perlstalker on his experience with Ceph (he's happy);

@chrisstpierre on what XML is good for (it's code with a built-in validator; don't use it for setting syslog levels);

the guy who wanted to use retired aircraft carriers as floating data centres;

Dustin on MozPool (think cloud for Panda Boards);

Stew (@digitalcrow) on Machination, his homegrown hierarchical config management tool (users can set their preferences; if needed for the rest of their group, it can be promoted up the hierarchy as needed);

Derek Balling on megacity.org/timeline (keep your fingers crossed!);

a Google dev on his experience bringing down GMail.

Afterward I went to the vendor booths again, and tried the RackSpace challenge: here's a VM and its root password; it needs to do X, Y and Z. GO. I was told my time wasn't bad (8.5 mins; wasn't actually too hard), and I may actually win something. Had lunch with John again and discussed academia, fads in theoretical computer science and the like.

The afternoon talk on OmniOS was interesting; it's an Illumos version/distro with a rigorous update schedule. The presenter's company uses it in a LOT of machines, and their customers expect THEM to fix any problems/security problems...not say "Yeah, the vendor's patch is coming in a couple weeks." Stripped down; they only include about 110 packages (JEOS: "Just Enough Operating System") in the default install. "Holy wars" slide: they use IPS ("because ALL package managers suck") and vi (holler from audience: "Which one?"). They wrote their own installer: "If you've worked with OpenSolaris before, you know that it's actually pretty easy getting it to work versus fucking getting it on the disk in the first place."

At the break I met with Nick Anderson (@cmdln_) and Diego Zamboni (@zzamboni, author of "Learning Cfengine 3"). Very cool to meet them both, particularly as they did not knee me in the groin for my impertinence in criticising Cf3 syntax. Very, very nice and generous folk.

The next talk, "NSA on the Cheap", was one I'd already heard from the USENIX conference in the summer (downloaded the MP3), so I ended up talking to Chris Allison. I met him in Baltimore on the last day, and it turns out he's Matt's coworker (and both work for David Blank-Edelman). And when he found out that Victor was there (we'd all gone out on our last night in Baltimore) he came along to meet him. We all met up, along with Victor's wife Jennifer, and caught up even more. (Sorry, I'm writing this on Friday; quality of writing taking a nosedive.)

And so but Victor, Jennifer and I went out to Banker's Hill, a restaurant close to the hotel. Very nice chipotle bacon meatloaf, some excellent beer, and great conversation and company. Retired back to the hotel and we both attended the .EDU BoF. Cool story: someone who's unable to put a firewall on his network (he's in a department, not central IT, so not an option for him) woke up one day to find his printer not only hacked, but the firmware running a proxy of PubMed to China ("Why is the data light blinking so much?"). Not only that, but he couldn't upgrade the firmware because the firmware reloading code had been overwritten.

Q: How do you know you're dealing with a Scary Viking Sysadmin?

A: Service monitoring is done via two ravens named Huginn and Muninn.

-

The White Trash Period Of My Life

Careful with words -- they are so meaningful / Yet they scatter like the booze from our breath... "The White Trash Period Of My Life", Josh Rouse

I woke up at a reasonable time and went down to the lobby for free wireless; finished up yesterday's entry (2400 words!), posted and ate breakfast with Andy, Alf ("I went back to the Instagram hat store yesterday and bought the fedora. But now I want to accessorize it") and...Bob in full Viking drag.

Andy: "Now you...you look like a major in the Norwegian army."

Off to the Powershell tutorial. I've been telling people since that I like two things from Microsoft: the Natural Keyboard, and now Powershell. There are some very, very nice features in there:

common args/functions for each command, provided by the PS library

directory-like listings for lots of things (though apparently manipulating the registry through PS is sub-optimal); feels Unix/Plan 9-like

$error contains all the errors in your interactive cycle

"programming with hand grenades": because just 'bout everything in PS is an object, you can pass that along through a pipe and the receiving command explodes it and tries to do the right thing.

My notes are kind of scattered: I was trying to install version 3 (hey MS: please make this easier), and then I got distracted by something I had to do for work. But I also got to talk to Steve Murawski, the instructor, during the afternoon break, as we were both on the LOPSA booth. I think MS managed to derive a lot of advantage from being the last to show up at the party.

Interestingly, during the course I saw on Twitter that Samba 4 has finally been released. My jaw dropped. It looks like there are still some missing bits, but it can be an AD now. [Keanu voice] Whoah.

During the break I helped staff the LOPSA booth and hung out with a sysadmin from NASA; one of her users is a scientist who gets data from the ChemCam (I think) on Curiosity. WAH.

The afternoon's course was on Ganeti, given by Tom Limoncelli and Guido Trotter. THAT is my project for next year: migrating my VMs, currently on one host, to Ganeti. It seems very, very cool. And on top of that, you can test it out in VirtualBox. I won't put in all my notes, since I'm writing this in a hurry (I always fall behind as the week goes on) and a lot of it is available in the documentation. But:

You avoid needing a SAN by letting it do DRBD on different pairs of nodes. Need to migrate a machine? Ganeti will pass it over to the other pair.

If you've got a pair of machines (which is about my scale), you've just gained failover of your VMs. If you've got more machines, you can declare a machine down (memory starts crapping out, PS failing, etc) and migrate the machines over to their alternate. When the machine's back up, Ganeti will do the necessary to get the machine back in the cluster (sync DRBDs, etc).

You can import already-existing VMs (Tom: "Thank God for summer interns.")

There's a master, but there are master candidates ready to take over if requested or if the master becomes unavailable.

There's a web manager to let users self-provision. There's also Synnefo, an AWS-like web FE that's commercialized as Okeanos.io (free trial: 3-hour lifetime VMs).

I talked with Scott afterward, and learned something I didn't know: NFS over GigE works fine for VM images. Turn on hard mounts (you want to know when something goes wrong), use TCP, use big block sizes, but it works just fine. This changes everything.

In the evening the bar was full and the hotel restaurant was definitely outside my per diem, so I took a cab downtown to the Tipsy Crow. Good food, nice beer, and great people watching. (Top tip for Canadians: here, the hipsters wear moustaches even when it's not Movember. Prepare now and get ahead of the curve.) Then back to the hotel for the BoFs. I missed Matt's on small infrastructure (damn) but did make the amateur astronomy BoF, which was quite cool. I ran into John Hewson, my roommate from the Baltimore LISA, and found out he's presenting tomorrow; I'll be there for that.

Q: How do you know you're with a Scary Viking Sysadmin?

A: Prefaces new cool thing he's about to show you with "So I learned about this at the last sysadmin Althing...."

-

Handshake Drugs

And if I ever was myself, I wasn't that night... "Handshake Drugs", Wilco

Wednesday was opening day: the stats (1000+ attendees) and the awards (the Powershell devs got one for "bringing the power of automated system administration to Windows, where it was previously largely unsupported"). Then the keynote from Vint Cerf, co-designer of TCP and yeah. He went over a lot of things, but made it clear he was asking questions, not proposing answers. Many cool quotes, including: "TCP/IP runs over everything, including you if you're not paying attention." Discussed the recent ITU talks a lot, and what exactly he's worried about there. Grab the audio/watch the video.

Next talk was about a giant scan of the entire Internet (/0) for SIP servers. Partway through my phone rang and I had to take it, but by the time I got out to the hall it'd stopped and it turned out to be a wrong number anyway. Grr.

IPv6 numbering strategies was next. "How many hosts can you fit in a /48? ALL OF THEM." Align your netblocks by nibble boundaries (hex numbers); it makes visual recognition of demarcation so much easier. Don't worry about packing addresses, because there's lots of room and why complicate things? You don't want to be doing bitwise math in the middle of the night.
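For my own notes, here's a small sketch of the nibble-boundary point using Python's standard ipaddress module. The /48 is made up (it sits inside the 2001:db8::/32 documentation range), and the helper name is mine, not anything from the talk:

```python
import ipaddress

# Hypothetical site allocation inside the reserved documentation prefix.
site = ipaddress.ip_network("2001:db8:abcd::/48")

def is_nibble_aligned(net):
    """True when the prefix length falls on a hex-digit (4-bit) boundary."""
    return net.prefixlen % 4 == 0

# A /48 leaves 128 - 48 = 80 bits of address space to play with.
address_bits = site.max_prefixlen - site.prefixlen

# Carving on a nibble boundary (/52) means each block differs by one hex digit.
aligned = list(site.subnets(new_prefix=52))

# Carving at /50 instead gives blocks that only make sense after bitwise math.
unaligned = list(site.subnets(new_prefix=50))

if __name__ == "__main__":
    print(address_bits, "bits free in", site)
    for block in aligned[:4]:
        print("aligned  ", block, is_nibble_aligned(block))
    for block in unaligned:
        print("unaligned", block, is_nibble_aligned(block))
```

The aligned blocks step through 0000, 1000, 2000 and so on in the fourth group, which is the "visual recognition" the speaker was talking about; the /50 blocks step through 0000, 4000, 8000, c000, which is already harder to eyeball.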

Lunch, and the vendor tent. But first an eye-wateringly expensive burrito -- tasty, but $9. It was NOT a $9-sized burrito. I talked to the CloudStack folks and the Ceph folks, and got cool stuff from each. Both look very cool, and I'm going to have to look into them more when I get home. Boxer shorts from the Zenoss folks ("We figured everyone had enough t-shirts").

I got to buttonhole Mark Burgess, tell him how much I'm grateful for what he's done but OMG would he please do something about the mess of brackets. Like the Wordpress sketch:

    commands:
      !wordpress_tarball_is_present::
        "/usr/bin/wget -q -O $($(params)[_tarfile]) $($(params)[_downloadurl])"
          comment => "Downloading latest version of WordPress.";

His response, as previously, was "Don't do that, then." To be fair, I didn't have this example and was trying to describe it verbally ("You know, dollar bracket dollar bracket variable square bracket...C'mon, I tweeted about it in January!"). And he agreed yes, it's a problem, but it's in the language now, and indirection is a problem no matter what. All of which is true, and I realize it's easy for me to propose work for other people without coming up with patches. And I let him know that this was a minor nit, that I really was grateful for Cf3. So there.

I got to ask Dru Lavigne about FreeBSD's support for ZFS (same as Illumos) and her opinion of DragonflyBSD (neat, thinks of it as meant for big data rather than desktops, "but maybe I'm just old and crotchety").

I talked with a PhD student who was there to present a paper. He said it was an accident he'd done this; he's not a sysadmin, and though his nominal field is CS, he's much more interested in improving the teaching of undergraduate students. ("The joke is that primary/secondary school teachers know all about teaching and not so much about the subject matter, and at university it's the other way around."). In CompSci it's all about the conferences -- that's where/how you present new work, not journals (Science, Nature) like the natural sciences. What's more, the prestigious conferences are the theoretical ones run by the ACM and the IEEE, not a practical/professional one like LISA. "My colleagues think I'm slumming."

Off to the talks! First one was a practice and experience report on the config and management of a crapton (700) iPads for students at an Australian university. The iPads belonged to the students -- so whatever profile was set up had to be removable when the course was over, and locking down permanently was not an option.

No suitable tools for them -- so they wrote their own. ("That's the way it is in education.") Started with Django, which the presenter said should be part of any sysadmin's toolset; easy to use, management interface for free. They configured one iPad, copied the configuration off, de-specified it with some judicious search and replace, and then prepared it for templating in Django. To install it on the iPad, the students would connect to an open wireless network, auth to the web app (which was connected to the university LDAP), and the iPad would prompt them to install the profile.
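The "configure one, copy it off, search and replace, template it" trick is easy to picture. This is only a rough sketch of the idea: it swaps in Python's string.Template for Django's template engine, and the profile fragment, keys and usernames are all invented for illustration (I never saw their actual files):

```python
from string import Template

# Hypothetical fragment of a profile copied off the one hand-configured iPad.
exported = """<dict>
  <key>UserName</key><string>jsmith</string>
  <key>EmailAddress</key><string>jsmith@uni.example</string>
</dict>"""

def despecify(profile, specifics):
    """Turn device-specific values into $placeholders.

    `specifics` maps a literal value found in the export to a placeholder name.
    """
    # Replace longer values first so "jsmith@uni.example" is handled
    # before the bare "jsmith" inside it gets clobbered.
    for value in sorted(specifics, key=len, reverse=True):
        profile = profile.replace(value, "${" + specifics[value] + "}")
    return Template(profile)

template = despecify(
    exported,
    {"jsmith": "username", "jsmith@uni.example": "email"},
)

# Render the profile for whichever student just authenticated.
rendered = template.substitute(username="alee", email="alee@uni.example")

if __name__ == "__main__":
    print(rendered)
```

A real version would also need to escape values for XML and pull the user details from LDAP after login; both are left out here.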

The open network was chosen because the secure network would require a password....which the iPad didn't have yet. And the settings file required an open password in it for the secure wireless to work. The reviewers commented on this a lot, but it was a conscious decision: setting up the iPad was one of ten tasks done on their second day, and a relatively technical one. And these were foreign students, so language comprehension was a problem. In the end, they felt it was a reasonable risk.

John Hewson was up next, talking about ConfSolve, his declarative configuration language connected to/written with a constraint solver. ("Just cross this red wire with this blue wire...") John was my roommate at the Baltimore LISA, and it was neat to see what he's been working on. Basically, you can say things like "I want this VM to have 500 GB of disk" and ConfSolve will be all like, "Fuck you, you only have 200 GB of storage left". You can also express hard limits and soft preferences ("Maximize memory use. It'd be great if you could minimise disk space as well, but just do your best"). This lets you do things like cloudbursting: "Please keep my VMs here unless things start to suck, in which case move my web, MySQL and DNS to AWS and leave behind my SMTP/IMAP."
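To make the hard-limit/soft-preference distinction concrete for myself, here's a toy brute-force version in Python. It is emphatically not ConfSolve (which has its own language and a real constraint solver); the VM names, sizes and penalty scheme are invented:

```python
from itertools import product

def place(vms, hosts, penalty):
    """Return the feasible placement with the lowest total penalty, or None.

    vms:     {vm name: GB of disk wanted}
    hosts:   {host name: GB of storage free}
    penalty: {host name: cost of putting one VM there}
    """
    names = list(vms)
    best = None
    # Try every possible assignment of VMs to hosts (fine for a toy, hopeless at scale).
    for choice in product(hosts, repeat=len(names)):
        used = {host: 0 for host in hosts}
        for vm, host in zip(names, choice):
            used[host] += vms[vm]
        # Hard limit: a host can never be asked for more storage than it has.
        if any(used[host] > hosts[host] for host in hosts):
            continue
        # Soft preference: lower penalty is nicer, but any feasible plan is legal.
        cost = sum(penalty[host] for host in choice)
        if best is None or cost < best[0]:
            best = (cost, dict(zip(names, choice)))
    return None if best is None else best[1]

vms = {"web": 120, "mysql": 100, "smtp": 50}
hosts = {"local": 200, "aws": 1000}
penalty = {"local": 0, "aws": 1}   # "please keep my VMs here unless things start to suck"

plan = place(vms, hosts, penalty)
too_big = place({"huge": 500}, {"local": 200}, {"local": 0})

if __name__ == "__main__":
    print(plan)      # one VM has to burst out to aws; the rest stay local
    print(too_big)   # None: the 500 GB request cannot be met with 200 GB free
```

The point of a real solver is that you state the limits and preferences and it does the searching; the exhaustive loop here is just the dumbest possible stand-in for that.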

After his presentation I went off to grab lunch, then back to the LISA game show. It was surprisingly fun and funny. And then, Matt and I went to the San Diego Maritime Museum, which was incredibly awesome. We walked through The Star of India, a huge three-masted cargo ship that still goes out and sails. There were actors there doing Living History (you could hear the caps) with kids, and displays/dioramas to look at. And then we met one of the actors who told us about the ship, the friggin' ENORMOUS sails that make it go (no motor), and about being the Master at Arms in the movie "Master and Commander". Which was our cue to head over to the HMS Surprise, used in the filming thereof. It's a replica, but accurate and really, really neat to see. Not nearly as big as the Star of India, and so many ropes...so very, very many ropes. And after that we went to a Soviet (!) Foxtrot-class submarine, where we had to climb through four circular hatches, each about a metre in diameter. You know how they say life in a submarine is claustrophobic? Yeah, they're not kidding. Amazing, and I can't recommend it enough.

We walked back to the hotel, got some food and beer, and headed off to the LOPSA annual meeting. I did not win a prize. Talked with Peter from the University of Alberta about the lightning talk I promised to do the next day about reproducible science. And thence to bed.

Q: How do you know you're with a Scary Viking Sysadmin?

A: When describing multiple roles at the office, says "My other hat is made of handforged steel."

-

Christmas With Jesus

And my conscience has it stripped down to science / Why does everything displease me? Still, I'm trying... "Christmas with Jesus", Josh Rouse

At 3am my phone went off with a page from $WORK. It was benign, but do you think I could get back to sleep? Could I bollocks. I gave up at 5am and came down to the hotel lobby (where the wireless does NOT cost $11/day for 512 Kb/s, or $15 for 3Mb/s) to get some work done and email my family. The music volume was set to 11, and after I heard the covers of "Living Thing" (Beautiful South) and "Stop Me If You Think That You've Heard This One Before" (Mark Ronson; disco) I retreated back to my hotel room to sit on my balcony and watch the airplanes. The airport is right by both the hotel and the downtown, so when you're flying in you get this amazing view of the buildings OH CRAP RIGHT THERE; from my balcony I can hear them coming in but not see them. But I can see the ones that are, I guess, flying to Japan; they go straight up, slowly, and the contrail against the morning twilight looks like rockets ascending to space. Sigh.

Abluted (ablated? hm...) and then down to the conference lounge to stock up on muffins and have conversations. I talked to the guy giving the .EDU workshop ("What we've found is that we didn't need a bachelor's degree in LDAP and iptables"), and with someone else about kids these days ("We had a rich heritage of naming schemes. Do you think they're going to name their desktop after Lord of the Rings?" "Naw, it's all gonna be Twilight and Glee.")

Which brought up another story of network debugging. After an organizational merger, network problems persisted until someone figured out that each network had its own DNS servers that had inconsistent views. To make matters worse, one set was named Kirk and Picard, and the other was named Gandalf and Frodo. Our Hero knew then what to do, and in the post-mortem Root Cause Diagnosis, Executive Summary, wrote "Genre Mismatch." [rimshot]

(6.48 am and the sun is rising right this moment. The earth, she is a beautiful place.)

And but so on to the HPC workshop, which intimidated me. I felt unprepared. I felt too small, too newbieish to be there. And when the guy from fucking Oak Ridge got up and said sheepishly, "I'm probably running one of the smaller clusters here," I cringed. But I needn't have worried. For one, maybe 1/3rd of the people introduced themselves as having small clusters (smallest I heard was 10 nodes, 120 cores), or being newbies, or both. For two, the host/moderator/glorious leader was truly excellent, in the best possible Bill and Ted sense, and made time for everyone's questions. For three, the participants were also generous with time and knowledge, and whether I asked questions or just sat back and listened, I learned so much.

Participants: Oak Ridge, Los Alamos, a lot of universities, and a financial trading firm that does a lot of modelling and some really interesting, regulatory-driven filesystem characteristics: nothing can be deleted for 7 years. So if someone's job blows up and it litters the filesystem with crap, you can't remove the files. Sure, they're only 10-100 MB each, but with a million jobs a day that adds up. You can archive...but if the SEC shows up asking for files, they need to have them within four hours.
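A back-of-envelope sketch of how "that adds up". The job count, file sizes and seven-year window are from the discussion; the fraction of jobs that blow up was never stated, so the 1% below is purely my assumption:

```python
def retained_tb(jobs_per_day, failing_fraction, mb_per_file, years=7):
    """Terabytes of undeletable debris piled up over the retention window.

    Assumes each failed job leaves one file behind (a simplification).
    """
    days = years * 365
    total_mb = jobs_per_day * failing_fraction * mb_per_file * days
    return total_mb / 1_000_000   # decimal units: 1 TB = 1,000,000 MB

# ASSUMPTION: 1% of a million daily jobs blow up; not a figure from the workshop.
low = retained_tb(1_000_000, 0.01, 10)     # 10 MB files
high = retained_tb(1_000_000, 0.01, 100)   # 100 MB files

if __name__ == "__main__":
    print(f"{low:.1f} TB to {high:.1f} TB of junk you are not allowed to delete")
```

Even at that guessed failure rate, the range works out to hundreds of terabytes up to a couple of petabytes of crap, all of which has to be retrievable within four hours.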

The guy from Oak Ridge runs at least one of his clusters diskless: less moving parts to fail. Everything gets saved to Lustre. This became a requirement when, in an earlier cluster, a node failed and it had Very Important Data on a local scratch disk, and it took a long time to recover. The PI (==principal investigator, for those not from an .EDU; prof/faculty member/etc who leads a lab) said, "I want to be able to walk into your server room, fire a shotgun at a random node, and have it back within 20 minutes." So, diskless. (He's also lucky because he gets biweekly maintenance windows. Another admin announces his quarterly outages a year in advance.)

There were a lot of people who ran configuration management (Cf3, Puppet, etc) on their compute nodes, which surprised me. I've thought about doing that, but assumed I'd be stealing precious CPU cycles from the science. Overwhelming response: Meh, they'll never notice. OTOH, using more than one management tool is going to cause admin confusion or state flapping, and you don't want to do that.

One guy said (both about this and the question of what installer to use), "Why are you using anything but Rocks? It's federally funded, so you've already paid for it. It works and it gets you a working cluster quickly. You should use it unless you have a good reason not to." "I think I can address that..." (laughter) Answer: inconsistency with installations; not all RPMs get installed when you're doing 700 nodes at once, so he uses Rocks for a bare-ish install and Cf3 after that -- a lot like I do with Cobbler for servers. And FAI was mentioned too, which apparently has support for CentOS now.

One .EDU admin gloms all his lab's desktops into the cluster, and uses Condor to tie it all together. "If it's idle, it's part of the cluster." No head node, jobs can be submitted from anywhere, and the dev environment matches the run environment. There's a wide mix of hardware, so part of user education is a) getting people to specify minimal CPU and memory requirements and b) letting them know that the ideal job is 2 hours long. (Actually, there were a lot of people who talked about high-turnover jobs like that, which is different from what I expected; I always thought of HPC as letting your cluster go to town for 3 weeks on something. Perhaps that's a function of my lab's work, or having a smaller cluster.)

User education was something that came up over and over again: telling people how to efficiently use the cluster, how to tweak settings (and then vetting jobs with scripts).

I asked about how people learned about HPC; there's not nearly the wealth of resources that there are for programming, sysadmin, networking, etc. Answer: yep, it's pretty quiet out there. Mailing lists tend to be product-specific (though are pretty excellent), vendor training is always good if you can get it, but generally you need to look around a lot. ACM has started a SIG for HPC.

I asked about checkpointing, which was something I've been very fuzzy about. Here's the skinny:

Checkpointing is freezing the process so that you can resurrect it later. It protects against node failures (maybe with automatic moving of the process/job to another node if one goes down) and outages (maybe caused by maintenance windows.)
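At its simplest, a program can do this for itself by saving its own progress every so often. Here's a minimal sketch of that idea in Python; the job (summing numbers), the file name and the stop_after knob for faking a node failure are all made up for illustration:

```python
import json
import os
import tempfile

def run(total_steps, checkpoint_path, stop_after=None):
    """Sum 0..total_steps-1, saving progress so a restart resumes part-way.

    stop_after simulates the node dying once that many steps are done.
    Returns the final total, or None if the run was cut short.
    """
    state = {"step": 0, "total": 0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as fh:
            state = json.load(fh)            # resurrect the earlier run
    while state["step"] < total_steps:
        if stop_after is not None and state["step"] >= stop_after:
            return None                      # "node failure"
        state["total"] += state["step"]
        state["step"] += 1
        # Write to a temp file, then rename, so a crash mid-write
        # never leaves a half-written checkpoint behind.
        tmp = checkpoint_path + ".tmp"
        with open(tmp, "w") as fh:
            json.dump(state, fh)
        os.replace(tmp, checkpoint_path)
    return state["total"]

path = os.path.join(tempfile.mkdtemp(), "job.ckpt")
first = run(1000, path, stop_after=750)   # dies three quarters of the way through
second = run(1000, path)                  # picks up at step 750 and finishes

if __name__ == "__main__":
    print(first, second)
```

Checkpointing on every single step is wasteful; a real job would save every N steps or every few minutes, trading lost work against I/O.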

Checkpointing can be done at a few different layers:

- the app itself

- the scheduler (Condor can do this; Torque can't)

- the OS (BLCR for Linux, but see below)

- or just suspending a VM and moving it around; I was unclear how many people did this.

- The easiest and best by far is for the app to do it. It knows its state intimately and is in the best position to do this. However, the app needs to support this. Not necessary to have it explicitly save the process (as in, kernel-resident memory image, registers, etc); if it can look at logs or something and say "Oh, I'm 3/4 done", then that's good too.

- The Condor scheduler supports this, *but* you have to do this by linking in its special libraries when you compile your program. And none of the big vendors do this (Matlab, Mathematica, etc).

- BLCR: "It's 90% working, but the 10% will kill you." Segfaults, restarts only work 2/3 of the time, etc. Open-source project from a federal lab and until very recently not funded -- so the response to "There's this bug..." was "Yeah, we're not funded. Can't do nothing for you." Funding has been obtained recently, so keep your fingers crossed.

One admin had problems with his nodes: random slowdowns, not caused by cstates or the other usual suspects. It's a BIOS problem of some sort and they're working it out with the vendor, but in the meantime the only way around it is to pull the affected node and let the power drain completely. This was pointed out by a user ("Hey, why is my job suddenly taking so long?") who was clever enough to write a dirt-simple 10 million iteration for-loop that very, very obviously took a lot longer on the affected node than the others.

At this point I asked if people were doing regular benchmarking on their clusters to pick up problems like this. Answer: no. They'll do benchmarking on their cluster when it's stood up so they have something to compare it to later, but users will unfailingly tell them if something's slow.

I asked about HPL; my impression when setting up the cluster was, yes, benchmark your own stuff, but benchmark HPL too 'cos that's what you do with a cluster. This brought up a host of problems for me, like compiling it and figuring out the best parameters for it.
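I didn't see the user's actual loop, but something along these lines would do the job. A rough Python sketch; the function names and the 1.5x tolerance are my own invention:

```python
import time

def spin(iterations=10_000_000):
    """Time a trivial CPU-bound loop; returns elapsed seconds."""
    start = time.perf_counter()
    total = 0
    for i in range(iterations):
        total += i
    return time.perf_counter() - start

def looks_slow(elapsed, baseline, tolerance=1.5):
    """Flag a node whose loop took more than `tolerance` times the baseline."""
    return elapsed > baseline * tolerance

if __name__ == "__main__":
    # Run this on a known-good node first and note the number as your baseline.
    print(f"10 million iterations took {spin():.2f}s")
```

Run it on a known-good node to get a baseline, then on the suspect node; the numbers won't be comparable across different hardware generations, only between nodes that should be identical.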

Answers:

- Yes, HPL is a bear. Oak Ridge: "We've got someone for that and that's all he does." (Response: "That's your answer for everything at Oak Ridge.")

- Fiddle with the params P, Q and N, and leave the rest alone. You can predict the FLOPS you should get on your hardware, and if you get within 90% or so of that you're fine.

- HPL is not that relevant for most people, and if you tune your cluster for linear algebra (which is what HPL does) you may get crappy performance on your real work.

- You can benchmark it if you want (and download Intel's binary if you do; FIXME: add link), but it's probably better and easier to stick to your own apps.

Random:

- There's a significant number of clusters that expose interactive sessions to users via qlogin; that had not occurred to me.

- Recommended tools:
  - ubmod: accounting graphs
  - Healthcheck scripts (Werewolf)
  - stress: cluster stress test tool
  - munin: to collect arbitrary info from a machine
  - collectl: good for ie millisecond resolution of traffic spikes

- "So if a box gets knocked over -- and this is just anecdotal -- my experience is that the user that logs back in first is the one who caused it."

- A lot of the discussion was prompted by questions like "Is anyone else doing X?" or "How many people here are doing Y?" Very helpful.

- If you have to return warranty-covered disks to the vendor but you really don't want the data to go, see if they'll accept the metal cover of the disk. You get to keep the spinning rust.

- A lot of talk about OOM-killing in the bad old days ("I can't tell you how many times it took out init."). One guy insisted it's a lot better now (3.x series).

- "The question of changing schedulers comes up in my group every six months."

- "What are you doing for log analysis?" "We log to /dev/null." (laughter) "No, really, we send syslog to /dev/null."

- Splunk is eye-wateringly expensive: 1.5 TB data/day =~ $1-2 million annual license.

- On how much disk space Oak Ridge has: "It's...I dunno, 12 or 13 PB? It's 33 tons of disks, that's what I remember."

- Cheap and cheerful NFS: OpenSolaris or FreeBSD running ZFS. For extra points, use an Aztec Zeus for a ZIL: a battery-backed 8GB DIMM that dumps to a compact flash card if the power goes out.

- Some people monitor not just for overutilization, but for underutilization: it's a chance for user education ("You're paying for my time and the hardware; let me help you get the best value for that"). For Oak Ridge, though, there's less pressure for that: scientists get billed no matter what.

- "We used to blame the network when there were problems. Now their app relies on SQL Server and we blame that."

- Sweeping for expired data is important. If it's scratch, then *treat* it as such: negotiate expiry dates and sweep regularly.

- Celebrity resemblances: Michael Moore and the guy from Dead Poets Society/The Good Wife. (Those are two different sysadmins, btw.)

- Asked about my .TK file problem; no insight. Take it to the lists. (Don't think I've written about this, and I should.)

- On why one lab couldn't get Vendor X to supply DKMS kernel modules for their hardware: "We're three orders of magnitude away from their biggest customer. We have *no* influence."

- Another vote for SoftwareCarpentry.org as a way to get people up to speed on Linux.

- A lot of people encountered problems upgrading to Torque 4.x and rolled back to 2.5. "The source code is disgusting. Have you ever looked at it? There's 15 years of cruft in there. The devs acknowledged the problem and announced they were going to be taking steps to fix things. One step: they're migrating to C++. [Kif sigh]"

- "Has anyone here used Moab Web Services? It's as scary as it sounds. Tomcat...yeah, I'll stop there." "You've turned the web into RPC. Again."

- "We don't have regulatory issues, but we do have a physicist/geologist issue."

- 1/3 of the Top 500 use SLURM as a scheduler. Slurm's srun =~ Torque's pbsdsh; I have the impression it does not use MPI (well, okay, neither does Torque, but a lot of people use Torque + mpirun), but I really need to do more reading.

- lmod (FIXME: add link) is an Environment Modules-compatible (works with old module files) replacement that fixes some problems with old EM, actively developed, written in Lua.

- People have had lots of bad experiences with external Fermi GPU boxes from Dell, particularly when attached to non-Dell equipment.

- Puppet has git hooks that let you pull out a particular branch on a node.

And finally:

Q: How do you know you're with a Scary Viking Sysadmin?

A: They ask for Thor's Skullsplitter Mead at the Google BoF.

-

Hotel Arizona

Hotel in Arizona made us all wanna feel like stars... "Hotel Arizona", Wilco

Sunday morning I was down in the lobby at 7.15am, drinking coffee purchased with my $5 gift certificate from the hotel for passing up housekeeping ("Sheraton Hotels Green Initiative"). I registered for the conference, came back to my hotel room to write some more, then back downstairs to wait for my tutorial on Amazon Web Services from Bill LeFebvre (former LISA chair and author of top(1)) and Marc Chianti. It was pretty damned awesome: an all-day course that introduced us to AWS and the many, many services they offer. For reasons that vary from budgeting to legal we're unlikely to move anything to AWS at $WORK, but it was very, very enlightening to learn more about it. Like:

Amazon lights up four new racks a day, just keeping up with increased demand.

Their RDS service (DB inna box) will set up replication automagically AND apply patches during configurable regular downtime. WAH.

vmstat(1) will, for a VM, show CPU cycles stolen by/for other VMs in the st column.
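If you'd rather pull that number out yourself, here's a minimal sketch. It assumes a Linux guest, where the aggregate "cpu" line of /proc/stat carries steal as its eighth counter; the function name and the sample numbers are made up for illustration. Note that this gives the figure since boot, not per interval like the later lines of vmstat output.

```python
def steal_percent(proc_stat_text):
    """Return steal time as a percentage of all CPU time since boot.

    Expects the text of /proc/stat (Linux). Illustrative helper, not a real API.
    """
    for line in proc_stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            values = [int(v) for v in fields[1:]]
            # Counter order: user nice system idle iowait irq softirq steal ...
            if len(values) < 8:
                return 0.0  # very old kernels do not report steal at all
            # guest/guest_nice (fields 9 and 10) are already counted in user/nice
            total = sum(values[:8])
            return 100.0 * values[7] / total if total else 0.0
    raise ValueError("no aggregate 'cpu' line found")


# Invented sample; on a real box, pass open("/proc/stat").read() instead.
sample = "cpu  100 0 50 800 20 5 5 20 0 0\ncpu0 100 0 50 800 20 5 5 20 0 0\n"
print(f"steal since boot: {steal_percent(sample):.1f}%")
```

To get a per-interval figure like vmstat's, you'd take two readings and work from the difference between them.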

Amazon will not really guarantee CPU specs, which makes sense (you're one guest on a host of 20 VMs, many hardware generations, etc). One customer they know will spin up a new instance and immediately benchmark it to see if performance is acceptable; if not, they'll destroy it and try again.

Netflix, one of AWS' biggest customers, does not use EBS (persistent) storage for its instances. If there's an EBS problem -- and this probably happens a few times a year -- they keep trucking.

It's quite hard to "burst into the cloud" -- to use your own data centre most of the time, then move stuff to AWS at Xmas, when you're Slashdotted, etc. The problem is: where's your load balancer? And how do you make that available no matter what?

One question I asked: How would you scale up an email service? 'Cos for that, you don't only need CPU power, but (say) expanded disk space, and that shared across instances. A: Either do something like GlusterFS on instances to share FS, or just stick everything in RDS (AWS' MySQL service) and let them take care of it.

The instructors know their stuff and taught it well. If you have the chance, I highly recommend it.

Lunch/Breaks:

Met someone from Mozilla who told me that they'd just decommissioned the last of their community mirrors in favour of CDNs -- less downtime. They're using AWS for a new set of sites they need in Brazil, rather than opening up a new data centre or some such.

Met someone from a flash sale site: they do sales every day at noon, when they'll get a million visitors in an hour, and then it's quiet for the next 23 hours. They don't use AWS -- they've got enough capacity in their data centre for this, and they recently dropped another cloud provider (not AWS) because they couldn't get the raw/root/hypervisor-level performance metrics they wanted.

Saw members of (I think) this show choir wearing spangly skirts and carrying two duffel bags over each shoulder, getting ready to head into one of the ballrooms for a performance at a charity lunch.

Met a sysadmin from a US government/educational lab, talking about fun new legal constraints: to keep running the lab, the gov't required not a university but an LLC. For SLAC, that required a new entity called SLAC National Lab, because Stanford was already trademarked and you can't delegate a trademark like you can DNS zones. And, it turns out, we're not the only .edu getting fuck-off prices from Oracle. No surprise, but still reassuring.

I saw Matt get tapped on the shoulder by one of the LISA organizers and taken aside. When he came back to the table he was wearing a rubber Nixon mask and carrying a large clanking duffel bag. I asked him what was happening and he said to shut up. I cried, and he slapped me, then told me he loved me, that it was just one last job and it would make everything right. (In the spirit of logrolling, here he is scoping out bank guards:

Where does the close bracket go?)

After that, I ran into my roommate from the Baltimore LISA in 2009 (check my tshirt...yep, 2009). Very good to see him. Then someone pointed out that I could get free toothpaste at the concierge desk, and I was all like, free toothpaste?

And then who should come in but Andy Seely, Tampa Bay homeboy and LISA Organizing Committee member. We went out for beer and supper at Karl Strauss (tl;dr: AWESOME stout). Discussed fatherhood, the ageing process, free-range parenting in a hangar full of B-52s, and just how beer is made. He got the hang of it eventually:

I bought beer for my wife, he took a picture of me to show his wife, and he shared his toothpaste by putting it on a microbrewery coaster so I didn't have to pay $7 for a tube at the hotel store, 'cos the concierge was out of toothpaste. It's not a euphemism.

Q: How do you know you're with a Scary Viking Sysadmin?

A: They insist on hard drive destruction via longboat funeral pyre.

-

(nothinsevergonnagetinmyway) Again

Wasted days, wasted nights Try to downplay being uptight... -- "(nothinsevergonnastandinmyway) Again", Wilco

Saturday I headed out the door at 5.30am -- just like I was going into work early. I'd been up late the night before finishing up "Zone One" by Colson Whitehead, which ZOMG is incredible and you should read, but I did not want to read while alone and feeling discombobulated in a hotel room far from home. Cab to the airport, and I was surprised to find I didn't even have to opt out; the L3 scanners were only being used irregularly. I noticed the hospital curtains set up for the private screening area; it looked a bit like God's own shower curtain.

The customs guard asked me where I was going, and whether I liked my job. "That's important, you know?" Young, a shaved head and a friendly manner. Confidential look left, right, then back at me. "My last job? I knew when it was time to leave that one. You have a good trip."

The gate for the airline I took was way out on a side wing of the airport, which I can only assume meant that airline lost a coin toss or something. The flight to Seattle was quick and low, so it wasn't until the flight to San Diego that a) we climbed up to our cruising altitude of $(echo "39000/3.3" | bc) 11818 meters and b) my ears started to hurt. I've got a cold and thought that my aggressive taking of cold medication would help, but no. The first seatmate had a shaved head, a Howie Mandel soul patch, a Toki watch and read "Road and Track" magazine, staring at the ads for mag wheels; the other seatmate announced that he was in the Navy, going to his last command, and was going to use the seat tray as a headrest as soon as they got to cruising. "I was up late last night, you know?" I ate my Ranch Corn Nuggets (seriously).

Once at the hotel, I ran into Bob the Norwegian, who berated me for being surprised that he was there. "I've TOLD you this over and over again!" Not only that, but he was there with three fellow Norwegian sysadmins, including his minion. I immediately started composing Scary Viking Sysadmin questions in my head; you may begin to look forward to them.

We went out to the Gaslamp district of San Diego, which reminds me a lot of Gastown in Vancouver; very familiar feel, and a similar arc to its history. Alf the Norwegian wanted a hat for cosplay, so we hit two -- TWO -- hat stores. The second resembled nothing so much as a souvenir shop in a tourist town, but the first was staffed by two hipsters looking like they'd stepped straight out of Instagram:

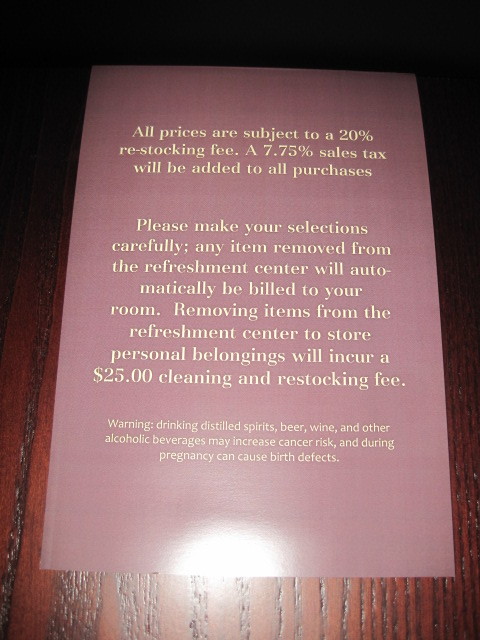

They sold $160 Panama hats. I very carefully stayed away from the merchandise. Oh -- and this is unrelated -- from the minibar in my hotel room:

We had dinner at a restaurant whose name I forget; stylish kind of place, with ten staff members (four of whom announced, separately, that they would be our server for the night). They seemed disappointed when I ordered a Wipeout IPA ("Yeah, we're really known more for our Sangria"), but Bob made up for it by ordering a Hawaiian Hoo-Hoo:

We watched the bar crawlers getting out of cabs dressed in Sexy Santa costumes ("The 12 Bars of Xmas Pub Crawl 2012") and discussed Agile Programming (which phrase, when embedded in a long string of Norwegian, sounds a lot like "Anger Management".)

Q: How do you know you're with a Scary Viking Sysadmin?

A: They explain the difference between a fjord and a fjell in terms of IPv6 connectivity.

There was also this truck in the streets, showing the good folks of San Diego just what they were missing by not being at home watching Fox Sports:

We headed back to the hotel, and Bob and I waited for Matt to show up. Eventually he did, with Ben Cotton in tow (never met him before -- nice guy, gives Matt as much crap as I do -> GOOD) and Matt regaled us with tales of his hotel room:

Matt: So -- I don't wanna sound special or anything -- but is your room on the 7th floor overlooking the pool and the marina with a great big king-sized bed? 'Cos mine is.

Me: Go on.

Matt: I asked the guy at the desk when I was checking in if I could get a king-size bed instead of a double --

Me: "Hi, I'm Matt Simmons. You may know me from Standalone Hyphen Sysadmin Dot Com?"

Ben: "I'm kind of a big deal on the Internet."

Matt: -- and he says sure, but we're gonna charge you a lot more if you trash it.

Not Matt's balcony:

(UPDATE: Matt read this and said "Actually, I'm on the 9th floor? Not the 7th." saintaardvarkthecarpeted.com regrets the error.)

I tweeted from the bar using my laptop ("It's an old AOLPhone prototype"). It was all good.

-

Can't Face Up

How many times have I tried Just to get away from you, and you reel me back? How many times have I lied That there's nothing that I can do? -- Sloan

Friday morning started with a quick look at Telemundo ("Próxima: Esclavas del sexo!"), then a walk to Philz coffee. This time I got the Tesora blend (their hallmark) and wow, that's good coffee. Passed a woman pulling two tiny dogs across the street: "C'mon, peeps!" Back at the tables I checked my email and got an amazing bit of spam about puppies, and how I could buy some rare breeds for ch33p.

First up was the Dreamworks talk. But before that, I have to relate something.

Earlier in the week I ran into Sean Kamath, who was giving the talk, and told him it looked interesting and that I'd be sure to be there. "Hah," he said, "Wanna bet? Tom Limoncelli's talk is opposite mine, and EVERYONE goes to a Tom Limoncelli talk. There's gonna be no one at mine."

Then yesterday I happened to be sitting next to Tom during a break, and he was discussing attendance at the different presentations. "Mine's tomorrow, and no one's going to be there." "Why not?" "Mine's opposite the Dreamworks talk, and EVERYONE goes to Dreamworks talks."

Both were quite amused -- and possibly a little relieved -- to learn what the other thought.

But back at the ranch: FIXME in 2008, Sean gave a talk on Dreamworks and someone asked afterward "So why do you use NFS anyway?" This talk was meant to answer that.

So, why? Two reasons:

- Because it works.

They use lots of local caching (their filers come from NetApp, and they also have a caching box), a global namespace, data hierarchy (varying on the scales of fast, reliable and expensive), leverage the automounter to the max, and 10GB core links everywhere, and it works.

- What else are you gonna use? Hm?

FTP/rcp/rdist? Nope. SSH? Won't handle the load. AFS lacks commercial support -- and it's hard to get the head of a billion-dollar business to buy into anything without commercial support.

They cache for two reasons: global availability and scalability. First, people in different locations -- like on different sides of the planet (oh, what an age we live in!) -- need access to the same files. (Most data has location affinity, but this will not necessarily be true in the future.) Geographical distribution and the speed of light do cause some problems: while data reads and getattr() are helped a lot by the caches, first open, sync()s and writes are slow when the file is in India and it's being opened in Redwood. They're thinking about improvements to the UI to indicate what's happening to reduce user frustration. But overall, it works and works well.

Scalability is just as important: thousands of machines hitting the same filer will melt it, and the way scenes are rendered, you will have just that situation. Yes, caching adds latency, but it's still faster than an overloaded filer. (It also requires awareness of close-to-open consistency.)

Automounter abuse is rampant at DW; if one filer is overloaded, they move some data somewhere else and change the automount maps. (They're grateful for the automounter version in RHEL 5: it no longer requires that the node be rebooted to reload the maps.) But like everything else it requires a good plan, or it gets confusing quickly.

Oh, and quick bit of trivia: they're currently sourcing workstations with 96GB of RAM.

One thing he talked about was that there are two ways to do sysadmin: rule-enforcing and policy-driven ("No!") or creative, flexible approaches to helping people get their work done. The first is boring; the second is exciting. But it does require careful attention to customers' needs.

So for example: the latest film DW released was "Megamind". This project was given a quota of 85 TB of storage; they finished the project with 75 TB in use. Great! But that doesn't account for 35 TB of global temp space that they used.

When global temp space was first brought up, the admins said, "So let me be clear: this is non-critical and non-backed up. Is that okay with you?" "Oh sure, great, fine." So the admins bought cheap-and-cheerful SATA storage: not fast, not reliable, but man it's cheap.

Only it turns out that non-backed up != non-critical. See, the artists discovered that this space was incredibly handy during rendering of crowds. And since space was only needed overnight, say, the space used could balloon up and down without causing any long-term problems. The admins discovered this when the storage went down for some reason, and the artists began to cry -- a day or two of production was lost because the storage had become important to one side without the other realizing it.

So the admins fixed things and moved on, because the artists need to get things done. That's why he's there. And if he does his job well, the artists can do wonderful things. He described watching "Madagascar", and seeing the crowd scenes -- the ones the admins and artists had sweated over. And they were good. But the rendering of the water in other scenes was amazing -- it blew him away, it was so realistic. And the artists had never even mentioned that; they'd just made magic.

Understand that your users are going to use your infrastructure in ways you never thought possible; what matters is what gets put on the screen.

Challenges remain:

Sometimes data really does need to be at another site, and caching doesn't always prevent problems. And problems in a data render farm (which is using all this data) tend to break everything else.

Much needs to be automated: provisioning, re-provisioning and allocating storage is mostly done by hand.

Disk utilization is hard to get in real time with > 4 PB of storage world wide; it can take 12 hours to get a report on usage by department on 75 TB, and that doesn't make the project managers happy. Maybe you need a team for that...or maybe you're too busy recovering from knocking over the filer by walking 75 TB of data to get usage by department.

Notifications need to be improved. He'd love to go from "Hey, a render farm just fell over!" to "Hey, a render farm's about to fall over!"

They still need configuration management. They have a homegrown one that's working so far. QOTD: "You can't believe how far you can get with duct tape and baling wire and twine and epoxy and post-it notes and Lego and...we've abused the crap out of free tools."

I went up afterwards and congratulated him on a good talk; his passion really came through, and it was amazing to me that a place as big as DW uses the same tools I do, even if it is on a much larger scale.

I highly recommend watching his talk (FIXME: slides only for now). Do it now; I'll be here when you get back.

During the break I got to meet Ben Rockwood at last. I've followed his blog for a long time, and it was a pleasure to talk with him. We chatted about Ruby on Rails, Twitter starting out on Joyent, upcoming changes in Illumos now that they've got everyone from Sun but Jonathan Schwartz (no details except to expect awesome and a renewed focus on servers, not desktops), the joke that Joyent should just come out with it and call itself "Sun". Oh, and Joyent has an office in Vancouver. Ben, next time you're up drop me a line!

Next up: Twitter. 165 million users, 90 million tweets per day, 1000 tweets per second....unless the Lakers win, in which case it peaks at 3085 tweets per second. (They really do get TPS reports.) 75% of those are by API -- not the website. And that percentage is increasing.
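Those numbers hang together, by the way. A quick back-of-the-envelope check (nothing here comes from the talk beyond the three figures above):

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_per_second(per_day):
    """Convert a daily count into an average per-second rate."""
    return per_day / SECONDS_PER_DAY


average_tps = average_per_second(90_000_000)  # 90 million tweets per day
peak_ratio = 3085 / average_tps               # the Lakers-win peak vs. the average

print(f"average: {average_tps:.0f} tweets/s, peak is {peak_ratio:.1f}x that")
```

So "1000 tweets per second" is the daily figure rounded down a bit, and the Lakers peak is roughly three times the average.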

Lessons learned:

Nothing works the first time; scale using the best available tech and plan to build everything more than once.

(Cron + ntp) x many machines == enough load on, say, the central syslog collector to cause micro outages across the site. (Oh, and speaking of logging: don't forget that syslog truncates messages > MTU of packet.)
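The talk didn't say how they fixed this, but one common way to blunt the (cron + ntp) effect is to give every host its own stable offset, so jobs spread out across a window instead of all firing in the same second. A minimal sketch, where the function name and the five-minute window are my own assumptions:

```python
import hashlib


def splay_seconds(hostname, window=300):
    """Map a hostname to a stable offset in [0, window) seconds.

    Hashing (rather than random.randint) means a host gets the same
    offset on every run, so its job still fires at a predictable time.
    """
    digest = hashlib.sha256(hostname.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % window


# Hypothetical farm of 1000 hosts: count how many land in each minute.
per_minute = [0] * 5
for i in range(1000):
    per_minute[splay_seconds(f"node{i:04d}") // 60] += 1
print(per_minute)
```

A cron job would then sleep for that many seconds before doing its real work.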

RRDtool isn't good for them, because by the time you want to figure out what that one minute outage was about two weeks ago, RRDtool has averaged away the data. (At this point Tobi Oetiker, a few seats down from me, said something I didn't catch. Dang.)
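To see why averaging hurts here, a toy illustration. This is plain Python imitating average-based consolidation, not RRDtool itself, and the traffic numbers are invented:

```python
def consolidate(samples, factor):
    """Average each run of `factor` samples into a single point."""
    out = []
    for i in range(0, len(samples), factor):
        chunk = samples[i:i + factor]
        out.append(sum(chunk) / len(chunk))
    return out


# One hour of per-minute request rates: steady 1000/s, with one dead minute.
per_minute = [1000.0] * 60
per_minute[17] = 0.0

quarter_hourly = consolidate(per_minute, 15)
hourly = consolidate(per_minute, 60)

print("worst minute:      ", min(per_minute))
print("worst 15-min point:", round(min(quarter_hourly), 1))
print("hourly point:      ", round(hourly[0], 1))
```

The dead minute shows up as a total outage in the raw data, a dip of a few percent at 15-minute resolution, and barely a wobble in the hourly point.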

Ops mantra: find the weakest link; fix; repeat. OPS stats: MTTD (mean time to detect problem) and MTTR (MT to recover from problem).
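For what it's worth, both stats are easy to compute once you record three timestamps per incident. A sketch with invented incidents; I'm assuming both are measured from the moment the problem started, which the talk did not spell out:

```python
from datetime import datetime, timedelta


def mean_delta(pairs):
    """Mean of (later - earlier) over a list of (earlier, later) datetimes."""
    deltas = [later - earlier for earlier, later in pairs]
    return sum(deltas, timedelta()) / len(deltas)


# Invented incidents: (started, detected, recovered)
incidents = [
    (datetime(2010, 11, 1, 10, 0), datetime(2010, 11, 1, 10, 4), datetime(2010, 11, 1, 10, 30)),
    (datetime(2010, 11, 3, 14, 0), datetime(2010, 11, 3, 14, 10), datetime(2010, 11, 3, 14, 50)),
]

mttd = mean_delta([(start, detected) for start, detected, _ in incidents])
mttr = mean_delta([(start, recovered) for start, _, recovered in incidents])
print("MTTD:", mttd, "MTTR:", mttr)
```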

It may be more important to fix the problem and get things going again than to have a post-mortem right away.

At this scale, at this time, system administration turns into a large programming project (because all your info is in your config. mgt tool, correct?). They use Puppet + hundreds of Puppet modules + SVN + post-commit hooks to ensure code reviews.

Occasionally someone will make a local change, then change permissions so that Puppet won't change it. This has led to a sysadmin mantra at Twitter: "You can't chattr +i with broken fingers."

Curve fitting and other basic statistical tools can really help -- they were able to predict the Twitpocalypse (first tweet ID > 2^32) to within a few hours.
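I don't know what model they actually used, but even a straight-line fit gets you a long way. A minimal sketch using ordinary least squares in plain Python; the daily ID samples are invented, and the helper names are mine:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def crossing_time(xs, ys, limit):
    """Estimate the x at which the fitted line reaches `limit`."""
    slope, intercept = fit_line(xs, ys)
    return (limit - intercept) / slope


# Invented data: highest tweet ID seen at the end of each day.
days = [0, 1, 2, 3, 4]
max_ids = [4_000_000_000 + 20_000_000 * d for d in days]

eta = crossing_time(days, max_ids, 2 ** 32)
print(f"IDs pass 2^32 about {eta:.2f} days after day 0")
```

Real traffic grows faster than linearly, so you'd want to refit as new data comes in (or fit a curve instead of a line), but the idea is the same.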

Decomposition is important to resiliency. Take your app and break it into n different independent, non-interlocked services. Put each of them on a farm of 20 machines, and now you no longer care if a machine that does X fails; it's not the machine that does X.

Because of this Nagios was not a good fit for them; they don't want to be alerted about every single problem, they want to know when 20% of the machines that do X are down.
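The check they want is easy enough to state in code. A sketch of the idea only; the function, the host names and the way health gets collected are all hypothetical, not anything Twitter described:

```python
def should_alert(health_by_host, threshold=0.20):
    """Alert only when at least `threshold` of a service's hosts are down.

    health_by_host maps hostname -> True (up) or False (down).
    """
    if not health_by_host:
        return False
    down = sum(1 for up in health_by_host.values() if not up)
    return down / len(health_by_host) >= threshold


# A farm of 20 machines that all do X.
farm = {f"x{i:02d}": True for i in range(20)}

farm["x03"] = False
print(should_alert(farm))  # one of 20 down: nobody gets paged

for name in ("x07", "x11", "x19"):
    farm[name] = False
print(should_alert(farm))  # four of 20 down: that's 20%, page someone
```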

Config management + LDAP for users and machines at an early, early stage made a huge difference in ease of management. But this was a big culture change, and management support was important.

And then...lunch with Victor and his sister. We found Good Karma, which had really, really good vegan food. I'm definitely a meatatarian, but this was very tasty stuff. And they've got good beer on tap; I finally got to try Pliny the Elder, and now I know why everyone tries to clone it.

Victor talked about one of the good things about config mgt for him: yes, he's got a smaller number of machines, but when he wants to set up a new VM to test something or other, he can get that many more tests done because he's not setting up the machine by hand each time. I hadn't thought of this advantage before.

After that came the Facebook talk. I paid a little less attention to this, because it was the third ZOMG-they're-big talk I'd been to today. But there were some interesting bits:

Everyone talks about avoiding hardware as a single point of failure, but software is a single point of failure too. Don't compound things by pushing errors upstream.

During the question period I asked them if it would be totally crazy to try different versions of software -- something like the security papers I've seen that push web pages through two different VMs to see if any differences emerge (though I didn't put it nearly so well). Answer: we push lots of small changes all the time for other reasons (problems emerge quickly, so easier to track down), so in a way we do that already (because of staged pushes).

Because we've decided to move fast, it's inevitable that problems will emerge. But you need to learn from those problems. The Facebook outage was an example of that.

Always do a post-mortem when problems emerge, and if you focus on learning rather than blame you'll get a lot more information, engagement and good work out of everyone. (And maybe the lesson will be that no one was clearly designated as responsible for X, and that needs to happen now.)

The final speech of the conference was David Blank-Edelman's keynote on the resemblance between superheroes and sysadmins. I watched for a while and then left. I think I can probably skip closing keynotes in the future.

And then....that was it. I said goodbye to Bob the Norwegian and Claudio, then I went back to my room and rested. I should have slept but I didn't; too bad, 'cos I was exhausted. After a while I went out and wandered around San Jose for an hour to see what I could see. There was the hipster cocktail bar called "Cantini's" or something; billiards, flood pants, cocktails, and the sign on the door saying "No tags -- no colours -- this is a NEUTRAL ZONE."

I didn't go there; I went to a generic looking restaurant with room at the bar. I got a beer and a burger, and went back to the hotel.

-

Anyone who's anyone

I missed my chance, but I think I'm gonna get another... -- Sloan

Thursday morning brought Brendan Gregg's (nee Sun, then Oracle, and now Joyent) talk about data visualization. He introduced himself as the shouting guy, and talked about how heat maps allowed him to see what the video demonstrated in a much more intuitive way. But in turn, these require accurate measurement and quantification of performance: not just "I/O sucks" but "the whole op takes 10 ms, 1 of which is CPU and 9 of which is latency."

Some assumptions to avoid when dealing with metrics:

The available metrics are correctly implemented. Are you sure there's not a kernel bug in how something is measured? He's come across them.

The available metrics are designed by performance experts. Mostly, they're kernel developers who were trying to debug their work, and found that their tool shipped.

The available metrics are complete. Unless you're using DTrace, you simply won't always find what you're looking for.

He's not a big fan of using IOPS to measure performance. There are a lot of questions when you start talking about IOPS. Like what layer?

- app

- library

- sync call

- VFS

- filesystem

- RAID

- device

(He didn't add political and financial, but I think that would have been funny.)

Once you've got a number, what's good or bad? The number can change radically depending on things like library/filesystem prefetching or readahead (IOPS inflation), read caching or write cancellation (deflation), the size of a read (he had an example demonstrating how measured capacity/busy-ness changes depending on the size of reads)...probably your company's stock price, too. And iostat or your local equivalent averages things, which means you lose outliers...and those outliers are what slow you down.
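The point about averages losing outliers is easy to show with made-up numbers. A small sketch (the latencies are invented, and the nearest-rank percentile is just one simple definition):

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the value at rank ceil(pct% of n)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


# 1000 invented I/Os: 980 take 1 ms, 20 take 200 ms.
latencies_ms = [1.0] * 980 + [200.0] * 20

mean_ms = statistics.mean(latencies_ms)
print(f"mean   {mean_ms:.2f} ms")
print(f"median {percentile(latencies_ms, 50):.0f} ms")
print(f"p99    {percentile(latencies_ms, 99):.0f} ms")
```

The mean comes out just under 5 ms, which describes none of the actual I/Os: most took 1 ms and the ones users complain about took 200.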

IOPS and bandwidth are good for capacity planning, but latency is a much better measure of performance.

And what's the best way of measuring latency? That's right, heatmaps. Coming from someone who worked on Fishworks, that's not surprising, but he made a good case. It was interesting to see how it's as much art as science...and given that he's exploiting the visual cortex to make things clear that never were, that's true in a few different ways.
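The data structure behind a latency heatmap is simple, even if making it look good is the art. A rough sketch of the binning, with a crude text rendering; this is my own toy, not how Fishworks or DTrace does it, and the events are invented:

```python
from collections import Counter


def latency_heatmap(events, time_step=1.0, latency_step=1.0):
    """Count events per (time bucket, latency bucket) cell.

    events is an iterable of (timestamp_seconds, latency_ms) pairs.
    """
    cells = Counter()
    for timestamp, latency in events:
        cells[(int(timestamp // time_step), int(latency // latency_step))] += 1
    return cells


def render(cells, shades=" .:*#"):
    """Draw time left to right, latency bottom to top, darker = more events."""
    if not cells:
        return ""
    max_t = max(t for t, _ in cells)
    max_l = max(l for _, l in cells)
    peak = max(cells.values())
    rows = []
    for l in range(max_l, -1, -1):
        row = ""
        for t in range(max_t + 1):
            count = cells.get((t, l), 0)
            # Empty cells stay blank; any nonzero count gets at least '.'
            idx = 0 if count == 0 else 1 + (count - 1) * (len(shades) - 2) // max(1, peak - 1)
            row += shades[idx]
        rows.append(row)
    return "\n".join(rows)


events = [(0.1, 0.5), (0.2, 0.7), (0.9, 0.6), (1.5, 0.4), (1.7, 9.2)]
cells = latency_heatmap(events)
print(render(cells))
```

The lone slow I/O shows up as a faint dot far above the dense band at the bottom, which is exactly the outlier an average would have swallowed.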

This part of the presentation was so visual that it's best for you to go view the recording (and anyway, my notes from that part suck).

During the break, I talked with someone who had worked at Nortel before it imploded. Sign that things were going wrong: new execs come in (RUMs: Redundant Unisys Managers) and alla sudden everyone is on the chargeback model. Networks charges ops for bandwidth; ops charges networks for storage and monitoring; both are charged by backups for backups, and in turn are charged by them for bandwidth and storage and monitoring.

The guy I was talking to figured out a way around this, though. Backups had a penalty clause for non-performance that no one ever took advantage of, but he did: he requested things from backup and proved that the backups were corrupt. It got to the point where the backup department was paying his department every month. What a clusterfuck.

After that, a quick trip to the vendor area to grab stickers for the kids, then back to the presentations.

Next was the 2nd day of Practice and Experience Reports ("Lessons Learned"). First up was the network admin (?) for ARIN about IPv6 migration. This was interesting, particularly as I'd naively assumed that, hey, they're ARIN and would have no problems at all on this front...instead of realizing that they're out in front to take a bullet for ALL of us, man. Yeah. They had problems, they screwed up a couple times, and came out battered but intact. YEAH!

Interesting bits:

Routing is not as reliable, not least because for a long time (and perhaps still) admins were treating IPv6 as an experiment, something still in beta: there were times when whole countries in Europe would disappear off the map for weeks as admins tried out different things.

Understanding ICMPv6 is a must. Naive assumptions brought over from IPv4 firewalls like "Hey, let's block all ICMP except ping" will break things in wonderfully subtle ways. Like: it's up to the client, not the router, to fragment packets. That means the client needs to discover the route MTU. That depends on ICMPv6.
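To put a number on the fragmentation point: IPv6 guarantees a minimum link MTU of 1280 bytes, and the fixed IPv6 header is 40 bytes. A tiny sketch of what a sender does when a "Packet Too Big" message arrives; the helper name and the tunnel MTU of 1480 are my own illustration, and TCP over IPv6 is assumed (20-byte TCP header, no options):

```python
IPV6_MIN_MTU = 1280   # every IPv6 link must carry at least this
IPV6_HEADER = 40      # fixed IPv6 header size in bytes
TCP_HEADER = 20       # TCP header without options


def tcp_payload_for(path_mtu):
    """Largest TCP payload per packet for a given path MTU.

    A reported MTU below the IPv6 minimum is clamped to 1280.
    """
    mtu = max(path_mtu, IPV6_MIN_MTU)
    return mtu - IPV6_HEADER - TCP_HEADER


# Sender starts out assuming plain Ethernet, then hears "Packet Too Big"
# from a router in front of a (hypothetical) tunnel with MTU 1480.
for mtu in (1500, 1480):
    print(f"path MTU {mtu}: up to {tcp_payload_for(mtu)} bytes of TCP payload")
```

If the firewall eats that "Packet Too Big" message, the sender never learns about the 1480 and keeps sending packets that silently vanish, which is where the wonderfully subtle breakage comes from.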

Not all transit is equal; ask your vendor if they're using the same equipment to route both protocols, or if IPv6 is on the old, crappy stuff they were going to eBay. Ask if they're using tunnels; tunnels aren't bad in themselves, but can add multiple layers to things and make things trickier to debug. (This goes double if you've decided to firewall ICMPv6...)

They're very happy with OpenBSD's pf as an IPv6 firewall.

Dual-stack OSes make things easy, but can make policy complex. Be aware that DHCPv6 is not fully supported (and yes, you need it to hand out things like DNS and NTP), and some clients (believe he said XP) would not do DNS lookups over v6 -- only v4, though they'd happily go to v6 servers once they got the DNS records.

IPv6 security features are a double-edged sword: yes, you can set up encrypted VPNs, but so can botnets. Security vendors are behind on this; he's watching for neat tricks that'll allow you to figure out private keys for traffic and thus decrypt eg. botnet C&C, but it's not there yet. (My notes are fuzzy on this, so I may have it wrong.)

Multicast is an attack-and-discovery protocol, and he's a bit worried about possible return of reflection attacks (Smurfv6). He's hopeful that the many, many lessons learned since then mean it won't happen, but it is a whole new protocol and set of stacks for baddies to explore and discover. (RFC 4942 was apparently important...)

Proxies are good for v4-only hosts: mod_proxy, squid and 6tunnel have worked well (6tunnel in particular).

Gotchas: reverse DNS can be painful, because v6 macros/generate statements don't work in BIND yet; IPv6 takes precedence in most cases, so watch for SSH barfing when it suddenly starts seeing new hosts.
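Two of the points above lend themselves to a quick sketch. First, the ICMPv6 one: the type numbers below are the standard ones, and RFC 4890 has the full filtering guidance; which of them matter for a given firewall depends on where it sits, so treat the table as a starting point rather than a policy. Second, reverse DNS: if generate statements aren't available, Python's ipaddress module will at least spell out the ip6.arpa names for you. The helper names, the prefix (the 2001:db8::/32 documentation range) and the hostnames are all made up.

```python
import ipaddress

# ICMPv6 types that a "block everything except ping" rule would drop.
ESSENTIAL_ICMPV6 = {
    1: "Destination Unreachable",
    2: "Packet Too Big",          # path MTU discovery depends on this one
    3: "Time Exceeded",
    4: "Parameter Problem",
    133: "Router Solicitation",
    134: "Router Advertisement",
    135: "Neighbor Solicitation",  # neighbor discovery: IPv6's stand-in for ARP
    136: "Neighbor Advertisement",
}
PING = {128: "Echo Request", 129: "Echo Reply"}


def dropped_by_ping_only_policy():
    """ICMPv6 types lost if only ping is allowed through."""
    return sorted(set(ESSENTIAL_ICMPV6) - set(PING))


def ptr_record(address, hostname):
    """Build one PTR record line for an address."""
    name = ipaddress.ip_address(address).reverse_pointer
    return f"{name}. IN PTR {hostname}."


def ptr_records(prefix, hosts):
    """hosts maps an integer offset within `prefix` to a hostname."""
    network = ipaddress.ip_network(prefix)
    return [ptr_record(str(network[offset]), name)
            for offset, name in sorted(hosts.items())]


print(dropped_by_ping_only_policy())
for line in ptr_records("2001:db8::/64", {1: "gw.example.com", 2: "www.example.com"}):
    print(line)
```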

Next up: Internet on the Edge, a good war story about bringing wireless through trees for DARPA that won best PER. Worth watching. (Later on, I happened across the person who presented and his boss in the elevator, and I congratulated him on his presentation. "See?" says his boss, and digs him in the ribs. "He didn't want to present it.")

Finally there was the report from one of the admins who helped set up Blue Gene 6, purchased from IBM. (The speaker was much younger than the others: skinny, pale guy with a black t-shirt that said GET YOUR WAR ON. "If anyone's got questions, I'm into that...") This report was extremely interesting to me, especially since I've got a purchase for a (much, much smaller) cluster coming up.

Blue Gene is a supercomputer with something like 10k nodes, and it uses 10GB/s Myrinet/Myricom (FIXME: Clarify which that is) cards/network for communication. Each node does source routing, and so latency is extremely low, throughput correspondingly high, and core routers correspondingly simple. To make this work, every card needs to have a map of the network so they know where to send stuff, and that map needs to be generated by a daemon that then distributes the map everywhere. Fine, right? Wrong:

The Myricom switch is admin'd by a web interface only: no CLI of any sort, no logging to syslog, nothing. Using this web interface becomes impractical when you've got thousands of nodes...

There's an inherent fragility in this design: a problem with a card means you need to turn off the whole node; a problem with the mapping daemon means things can get corrupt real quick.

And guess what? They had problems with the cards: a bad batch of transceivers meant that, over the 2-year life of the machine, they lost a full year's worth of computing. It took a long time to realize the problem, it took a long time to get the vendor to realize it, and it took longer to get it fixed (FIXME: Did he ever get it fixed?)

So, lessons learned:

Vendor relations should not start with a problem. If the first time you call them up is to say "Your stuff is breaking", you're doomed. Dealing with vendor problems calls for social skills first, and tech skills second. Get to know more than just the sales team; get familiar with the tech team before you need them.

Know your systems inside and out before they break; part of their problem was not being as familiar with things as they should have been.

Have realistic expectations when someone says "We'll give you such a deal on this equipment!" That's why they went w/Myricom -- it was dirt cheap. They saved money on that, but it would have been better spent on hiring more people. (I realize that doesn't exactly make sense, but that's what's in my notes.)

Don't pay the vendor 'til it works. Do your acceptance testing, but be aware of subcontractor relations. In this case, IBM was providing Blue Gene but had subcontracted Myricom -- and already paid them. Oops, no leverage. (To be fair, he said that Myricom did help once they were convinced...but see the next point.)

Have an agreement in advance with your vendor about how much failure is too much. In their case, the failure rate was slow but steady, and Myricom kept saying "Oh, let's just let it shake out a little longer..." It took a lot of work to get them to agree to replace the cards.

Don't let vendors talk to each other through you. In their case, IBM would tell them something, and they'd have to pass that on to Myricom, and then the process would reverse. There were lots of details to keep track of, and no one had the whole picture. Setting up a weekly phone meeting with the vendors helped immensely.

Don't wait for the vendors to do your work. Don't assume that they'll troubleshoot something for you.

Don't buy stuff with a web-only interface. Make sure you can monitor things. (I'm looking at you, Dell C6500.)

Stay positive at all costs! This was a huge, long-running problem that impaired an expensive and important piece of equipment, and resisting pessimism was important. Celebrate victories locally; give positive feedback to the vendors; keep reminding everyone that you are making progress.

Question from me: How much of this advice depends on being involved in negotiations? Answer: maybe 50%; acceptance testing is a big part of it (and see previous comments about that) but vendor relations is the other part.

I was hoping to talk to the presenter afterward, but it didn't happen; there were a lot of other people who got to him first. :-) But what I heard (and heard again later from Victor) confirmed the low opinion of the Myrinet protocol/cards...man, there's nothing there to inspire confidence.

And after that came the talk by Adam Moskowitz on becoming a senior sysadmin. It was a list of (at times strongly) suggested skills -- hard, squishy, and soft -- that you'll need. Overarching all of it was the importance of knowing the business you're in and the people you're responsible to: why you're doing something ("it supports the business by making X, Y and Z easier" is the correct answer; "it's cool" is not), explaining it to the boss and the boss' boss, respecting the people you work with and not looking down on them because they don't know computers. Worth watching.

That night, Victor, his sister and I drove up to San Francisco to meet Noah and Sarah at the 21st Amendment brewpub. The drive took two hours (four accidents on the way), but it was worth it: good beer, good food, good friends, great time. Sadly I was not able to bring any back; the Noir et Blanc was awesome.

One good story to relate: there was an illustrator at the party who told us about (and showed pictures of) a coin she's designing for a client. They gave her the Three Wolves artwork to put on the coin. Yeah.

Footnotes:

-

Scary_viking_sysadmins

+10 LART of terror. (Quote from Matt.)

-

A-Side Wins

I raise my glass to the cut-and-dried, To the amplified I raise my glass to the b-side. -- Sloan, "A-Side Wins"

Tuesday morning I got paged at 4:30am about /tmp filling up on a webserver at work, and I couldn't get back to sleep after that. I looked out my window at Venus, Saturn, Spica and Arcturus for a while, blogged & posted, then went out for coffee. It was cold -- around 4 or 5C. I walked past the Fairmont and wondered at the expensive cars in their front parking space; I'd noticed something fancy happening last night, and I've been meaning to look it up.

Two buses with suits pulled up in front of the Convention Centre; I thought maybe there was going to be a rumble, but they were here for the Medevice Conference that's in the other half of the Centre. (The Centre, by the way, is enormous. It's a little creepy to walk from one end to the other, in this enormous empty marble hall, followed by Kenny G the whole way.)

And then it was tutorial time: Cfengine 3 all day. I'd really been looking forward to this, and it was pretty darn good. (Note to myself: fill out the tutorial evaluation form.) Mark Burgess his own bad self was the instructor. His focus was on getting things done with Cfengine 3: start small and expand the scope as you learn more.

At times it dragged a little; there was a lot of time spent on niceties of syntax and the many, many particular things you can do with Cf3. (He spent three minutes talking about granularity of time measurement in Cf3.)

Thus, by the 3rd quarter of the day we were only halfway through his 100+ slides. But then he sped up by popular request, and this was probably the most valuable part for me: explaining some of the principles underlying the language itself. He cleared up a lot of things that I had not understood before, and I think I've got a much better idea of how to use it. (Good thing, too, since I'm giving a talk on Cf2 and Cf3 for a user group in December.)

During the break, I asked him about the Community Library. This is a collection of promises -- subroutines, basically -- that do high-level things like add packages, or comment-out sections of a file. When I started experimenting with Cf3, I followed the tutorials and noticed that there were a few times where the CL promises had changed (new names, different arguments, etc). I filed a bug and the documentation was fixed, but this worried me; I felt like libc's printf() had suddenly been renamed showstuff(). Was this going to happen all the time?

The answer was no: the CL is meant to be immutable; new features are appended, and don't replace old ones. In a very few cases, promises have been rewritten if they were badly implemented in the first place.

At lunch, I listened to some people in Federal labs talk about supercomputer/big cluster purchases. "I had a thirty-day burnin and found x, y and z wrong..." "You had 30 days? Man, we only have 14 days." "Well, this was 10 years ago..." I was surprised by this; why wouldn't you take a long time to verify that your expensive hardware actually worked?

User pressure is one part; they want it now. But the other part is management. They know that vendors hate long burn-in periods, because there's a bunch of expensive shiny that you haven't been paid for yet getting banged around. So management will use this as a bargaining chip in the bidding process: we'll cut down burn-in if you'll give us something else. It's frustrating for the sysadmins; you hope management knows what they're doing.

I talked with another sysadmin who was in the Cf3 class. He'd recently gone through the Cf2 -> Cf3 conversion; it took 6 months and was very, very hard. Cf3 is so radically different from Cf2 that it took a long time to wrap his head around how it/Mark Burgess thought. And then they'd come across bugs in documentation, or bugs in implementation, and that would hold things up.

In fact, version 3.1 has apparently just come out, fixing a bug that he'd tripped across: inserting a file into the middle of another file truncated that file. Cf3 would divide the first file in two (as requested), insert the bit you wanted, then throw away the second half rather than glom it back on. Whoops.
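To make that bug concrete, here is a minimal sketch in plain Python (not Cf3, and not how Cf3 implements it; the function name and sample lines are invented for illustration). The whole point is the last step: the tail has to be glued back on.

```python
def insert_block(original, block, after_line):
    """Return a new list of lines with `block` inserted after the
    first `after_line` lines of `original`."""
    head = original[:after_line]   # everything before the insertion point
    tail = original[after_line:]   # everything after it
    # The bug described above is equivalent to returning `head + block`
    # and silently dropping `tail`.
    return head + block + tail


original = ["one", "two", "three", "four"]
block = ["inserted"]
result = insert_block(original, block, 2)
```

A cheap sanity check for this kind of edit is that the result has exactly as many lines as the original plus the inserted block.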

As a result, they're evaluating Puppet -- yes, even after 6 months of effort to port...in fact, because it took 6 months of effort to port. And because Puppet does hierarchical inheritance, whereas Cf3 only does sets and unions of sets. (Which MB says is much more flexible and simple: do Java class hierarchies really simplify anything?)
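As a rough illustration of the "sets and unions of sets" idea, here is a sketch in plain Python. It is not Cf3 syntax and only models the set arithmetic; the host names, class names, and helper functions are all made up. Each host simply belongs to a flat set of classes, and a policy picks hosts by combining those sets, with no parent/child hierarchy anywhere.

```python
# Hypothetical inventory: each host belongs to a flat set of classes.
host_classes = {
    "web1": {"linux", "webserver", "production"},
    "web2": {"linux", "webserver", "testing"},
    "db1": {"linux", "database", "production"},
}


def hosts_in_all(required):
    """Hosts that are members of every class in `required` (intersection)."""
    return sorted(h for h, classes in host_classes.items() if required <= classes)


def hosts_in_any(options):
    """Hosts that are members of at least one class in `options` (union)."""
    return sorted(h for h, classes in host_classes.items() if options & classes)


production_linux = hosts_in_all({"linux", "production"})
web_or_db = hosts_in_any({"webserver", "database"})
```

Adding a new grouping here means adding a class name to some hosts; nothing has to be slotted into an existing inheritance tree.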

After all of that, it was time for supper. Matt and I met up with a few others and headed to The Loft, based on some random tweet I'd seen. There was a long talk about interviews, and I talked to one of the people about what it's like to work in a secret/secretive environment.

Secrecy is something I keep bumping up against at LISAs; there are military folks, government folks (and not just US), and folks from private companies that just don't talk a lot about what they do. I'm very curious about all of this, but I'm always reluctant to ask...I don't want to put anyone in an awkward spot. OTOH, they're probably used to it.

After that, back to the hotels to continue the conversation with the rapidly dwindling supplies of free beer, then off to the Fedora 14 BoF that I promised Beth Lynn I'd attend. It was interesting, particularly the mention of Fedora CSI ("Tonight on NBC!"), a set of CC-licensed system administration documentation. David Nalley introduced it by saying that, if you change jobs every few years like he does, you probably find yourself building the same damn documentation from scratch over and over again. Oh, and the Fedora project is looking for a sysadmin after burning through the first one. Interesting...

And then to bed. I'm not getting nearly as much sleep here as I should.

-

Nothing left to make me want to stay

Growing up was wall-to-wall excitement, but I don't recall Another who could understand at all... -- Sloan

Monday: day two of tutorials. I found Beth Lynn in the lobby and congratulated her on being very close to winning her bet; she's a great deal closer than I would have guessed. She convinced me to show up at the Fedora 14 BoF tomorrow.

First tutorial was "NASes for the Masses" with Lee Damon, which was all about how to do cheap NASes that are "mostly reliable" -- which can be damn good if your requirements are lower, or your budget smaller. You can build a multi-TB RAID array for about $8000 these days, which is not that bad at all. He figures these will top out at around 100 users...200-300 users and you want to spend the money on better stuff.

The tutorial was good, and a lot of it was stuff I'd have liked to know about five years ago when I had no budget. (Of course, the disk prices weren't nearly so good back then...) At the moment I've got a good-ish budget -- though, as it has for Damon, Oracle's ending of their education discount has definitely cut off a preferred supplier -- so it's not immediately relevant for me.

QOTD:

Damon: People load up their file servers with too much. Why would you put MSSQL on your file server?

Me: NFS over SQL.

Matt: I think I'm going to be sick.

Damon also told us about his experience with Linux as an NFS server: two identical machines, two identical jobs run, but one ran with the data mounted from Linux and the other with the data mounted from FreeBSD. The FreeBSD server gave a 40% speed increase. "I will never use Linux as an NFS server again."

Oh, and a suggestion from the audience: smallnetbuilder.com for benchmarks and reviews of small NASes. Must check it out.

During the break I talked to someone from a movie studio who talked about the legal hurdles he had to jump in his work. F'r example: waiting eight weeks to get legal approval to host a local copy of a CSS file (with an open-source license) that added mouseover effects, as opposed to just referring to the source on its original host.

Or getting approval for showing 4 seconds of one of their movies in a presentation he made. Legal came back with questions: "How big will the screen be? How many people will be there? What quality will you be showing it at?" "It's a conference! There's going to be a big screen! Lots of people! Why?" "Oh, so it's not going to be 20 people huddled around a laptop? Why didn't you say so?" Copyright concerns? No: they wanted to make sure that the clip would be shown at a suitably high quality, showing off their film to the best effect. "I could get in a lot of trouble for showing a clip at YouTube quality," he said.

The afternoon was "Recovering from Linux Hard Drive Disasters" with Ted Ts'o, and this was pretty amazing. He covered a lot of material, starting with how filesystems worked and ending with deep juju using debugfs. If you ever get the chance to take this course, I highly recommend it. It is choice.

Bits:

ReiserFS: designed to be very, very good at handling lots of little files, because of Reiser's belief that the line between databases and filesystems should be erased (or at least a lot thinner than it is). "Thus, ReiserFS is the perfect filesystem if you want to store a Windows registry."

Fsck for ReiserFS works pretty well most of the time; it scans the partition looking for btree nodes (is that the right term?) (ReiserFS uses btrees throughout the filesystem) and then reconstructs the btree (ie, your filesystem) with whatever it finds. Where that falls down is if you've got VM images which themselves have ReiserFS filesystems...everything gets glommed together and it is a big, big mess.

BtrFS and ZFS both very cool, and nearly feature-identical though they take very different paths to get there. Both complex enough that you almost can't think of them as a filesystem, but need to think of them in software engineering terms.

ZFS was the cure for the "filesystems are done" syndrome. But it took many, many years of hard work to get it fast and stable. BtrFS is coming up from behind, and still struggles with slow reads and slowness in an aged FS.

Copy-on-write FS like ZFS and BtrFS struggle with aged filesystems and fragmentation; benchmarking should be done on aged FS to get an accurate idea of how it'll work for you.

Live demos with debugfs: Wow.

I got to ask him about fsync() O_PONIES; he basically said if you run bleeding edge distros on your laptop with closed-source graphics drivers, don't come crying to him when you lose data. (He said it much, much nicer than that.) This happens because ext4 assumes a stable system -- one that's not crashing every few minutes -- and so it can optimize for speed (which means, say, delaying sync()s for a bit). If you are running bleeding edge stuff, then you need to optimize for conservative approaches to data preservation and you lose speed. (That's an awkward sentence, I realize.)
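For applications that do care about surviving a crash, the usual defensive pattern is to write a temporary file, fsync() it, and only then rename it over the original. Here is a minimal Python sketch of that pattern; it is a general POSIX idiom rather than anything shown in the tutorial, the function name is made up, and the directory fsync at the end assumes a Unix-like system.

```python
import os
import tempfile


def durable_write(path, data):
    """Replace `path` with `data` (bytes) so that a crash leaves either
    the old contents or the new contents, not a truncated file."""
    directory = os.path.dirname(os.path.abspath(path))
    # Create the temp file in the same directory so the rename stays on
    # one filesystem and is therefore atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()             # push Python's buffer to the kernel
            os.fsync(tmp.fileno())  # ask the kernel to push it to disk
        os.replace(tmp_path, path)  # atomic rename over the old file
    except BaseException:
        # Don't leave a stray temp file behind if anything went wrong.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    # fsync the directory so the rename itself is on disk (Unix only).
    dir_fd = os.open(directory, os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)
```

The trade-off is exactly the one described above: every call pays for at least two syncs, so this is the conservative, slower end of the spectrum.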

I also got to ask him about RAID partitions for databases. At $WORK we've got a 3TB disk array that I made into one partition, slapped ext3 on, and put MySQL there. One of the things he mentioned during his tutorial made me wonder if that was necessary, so I asked him what the advantages/disadvantages were.