13 Mar 2013

Yesterday I was asked to restore a backup for a Windows desktop, and I

couldn't: I'd been backing up "Documents and Settings", not "Users".

The former is appropriate for XP, which this workstation'd had at some

point, but not Windows 7 which it had now. I'd missed the 286-byte

size of full backups. Luckily the user had another way to retrieve

his data. But I felt pretty sick for a while; still do.

When shit like this happens, I try to come up with a Nagios test to

watch for it. It's the regression test for sysadmins: is Nagios okay?

Then at least you aren't repeating any mistakes. But how the hell do

I test for this case? I'm not sure when the change happened, because

the full backups I had (going back three months; our usual policy)

were all 286 bytes. I thought I could settle for "alert me about full backups

under...oh, I dunno, 100KB." But a search for that in the catalog

turns up maybe ten or so, nine of them legitimate, meaning an alert

for this will give 90% false positives.

So all right, a list of exceptions. Except that needs to be

maintained. So imagine this sequence:

- A tiny filesystem is being backed up, and it's on the

don't-bug-me-if-it's-small list.

- It actually starts holding files, which are now backed up, so it's

probably important.

- But I don't update the don't-bug-me-if-it's-small list.

- Something goes wrong and the backups go back to being small.

- Someone requests the restore, and I can't provide it.

I need some way of saying "Oh, that's unusual..." Which makes me

think of statistics, which I don't understand very well, and I start

to think this is a bigger task than I realize and I'm maybe trying to

create AI in a Bash script.

And really, I've got don't-bug-me-if-this lists, and local checks

and exceptions, and I've documented things as well as I can but it's

never enough. I've tried hard to make things easy for my eventual

successor (I'm not switching jobs any time soon; just thinking of the

future), and if not easy then at least documented, but I have this

nagging feeling that she'll look at all this and just shake her head,

the way I've done at other setups. It feels like this baroque,

Balkanized, over-intricate set of kludges, special cases, homebrown

scripts littered with FIXMEs and I don't know what-all. I've got

Nagios invoking Bacula, and Cfengine managing some but not all, and it

just feels overgrown. Weedy. Some days I don't know the way out.

And the stupid part is that NONE OF THIS WOULD HAVE FIXED THE ORIGINAL

PROBLEM: I screwed up and did not adjust the files I was backing up

for a client. And that realization -- that after cycling through

all these dark worryings about how I'm doing my job, I'm right back

where I started, a gutkick suspicion that I shouldn't be allowed to do

what I do and I can't even begin to make a go at fixing things --

that is one hell of a way to end a day at work.

Tags:

sysadmin

backups

09 Mar 2013

All right, this was a frustrating night. It was clear and moonless

and lovely, but I spent much of my time looking for faint fuzzies and

not finding them. Which is probably not surprising since I'm in the

goram suburbs, but still. I prepared for this run by getting my maps

out in advance, and printing out sketches of stuff I was looking

for...but all for nought. NOUGHT, I say.

M33: Just for fun, before it sunk out of sight. No.

M1: No, 2x. First with the manual setting circles, and then another

attempt following an actual chart. The second attempt actually got me

in the right area, but I still couldn't see it. I realized that the

chart I'd printed out seemed to be about 5 degrees off in azimuth --

not good. Same thing happened with M50. (Pretty sure I aligned with Polaris this time...)

X Cancri: A carbon star. Found, hurrah! Very nice.

Castor: Resolved to double at 100X, and maybe to triple at 160X.

Interestingly, it looked like a sheaf of wheat at 160X. I wish I'd

collimated before heading out.

M66: I spent a long time tracking this down, and the best I

could get was maybe-possibly with averted vision. Maybe.

Other than a mandatory quick look at Jupiter, that was pretty much

it. Not much found, not much accomplished. It feels a bit likewhen I

was first starting out: can't find things, can't see them when I do. Grrr.

Tags:

astronomy

07 Mar 2013

The other day at $WORK, a user asked me why the jobs she was

submitting to the cluser were being deferred. They only needed one

core each, and showq showed lots free, so WTF?

By the time I checked on the state of these deferred jobs, the jobs

were already running -- and yeah, there were lots of cores free.

The checkjob command showed something interesting, though:

$ checkjob 34141 | grep Messages

Messages: cannot start job - RM failure, rc: 15041, msg: 'Execution server rejected request MSG=cannot send job to mom, state=PRERUN'

I thought this was from the node that the job was on now:

$ qstat -f 34141 | grep exec_host

exec_host = compute-3-5/19

but that was a red herring. (I could've also got the host from

"checkjob | grep -2 "Allocated Nodes".) Instead, grepping through

maui.log showed that it had been compute-1-11 that was the real

problem:

/opt/maui/log $ sudo grep 34141 maui.log.3 maui.log.2 maui.log.1 maui.log |grep -E 'WARN|ERROR'

maui.log.3:03/05 16:21:48 ERROR: job '34141' has NULL WCLimit field

maui.log.3:03/05 16:21:48 ERROR: job '34141' has NULL WCLimit field

maui.log.3:03/05 16:21:50 ERROR: job '34141' cannot be started: (rc: 15041 errmsg: 'Execution server rejected request MSG=cannot send job to mom, state=PRERUN' hostlist: 'compute-1-11')

maui.log.3:03/05 16:21:50 WARNING: cannot start job '34141' through resource manager

maui.log.3:03/05 16:21:50 ERROR: cannot start job '34141' in partition DEFAULT

maui.log.3:03/05 17:21:56 ERROR: job '34141' cannot be started: (rc: 15041 errmsg: 'Execution server rejected request MSG=cannot send job to mom, state=PRERUN' hostlist: 'compute-1-11')

There were lots of messages like this; I think the scheduler kept only

gave up on that node much later (hours).

checknode showed nothing wrong; in fact, it was running a job

currently and had 4 free cores:

$ checknode compute-1-11

checking node compute-1-11

State: Busy (in current state for 6:23:11:32)

Configured Resources: PROCS: 12 MEM: 47G SWAP: 46G DISK: 1M

Utilized Resources: PROCS: 8

Dedicated Resources: PROCS: 8

Opsys: linux Arch: [NONE]

Speed: 1.00 Load: 13.610

Network: [DEFAULT]

Features: [NONE]

Attributes: [Batch]

Classes: [default 0:12]

Total Time: INFINITY Up: INFINITY (98.74%) Active: INFINITY (18.08%)

Reservations:

Job '33849'(8) -6:23:12:03 -> 93:00:47:56 (99:23:59:59)

JobList: 33849

maui.log showed an alert:

maui.log.10:03/03 22:32:26 ALERT: RM state corruption. job '34001' has idle node 'compute-1-11' allocated (node forced to active state)

but that was another red herring; this is common and benign.

dmesg on compute-1-11 showed the problem:

compute-1-11 $ dmesg | tail

sd 0:0:0:0: SCSI error: return code = 0x08000002

sda: Current: sense key: Hardware Error

<<vendor>> ASC=0x80 ASCQ=0x87ASC=0x80 <<vendor>> ASCQ=0x87

Info fld=0x10489

end_request: I/O error, dev sda, sector 66697

Aborting journal on device sda1.

ext3_abort called.

EXT3-fs error (device sda1): ext3_journal_start_sb: Detected aborted journal

Remounting filesystem read-only

(Linux)|Wed Mar 06 09:37:20|[compute-1-11:~]$ mount

/dev/sda1 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda5 on /state/partition1 type ext3 (rw)

/dev/sda2 on /var type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

sophie:/export/scratch on /share/networkscratch type nfs (rw,addr=10.1.1.1)

mount: warning /etc/mtab is not writable (e.g. read-only filesystem).

It's possible that information reported by mount(8) is not

up to date. For actual information about system mount points

check the /proc/mounts file.

but this was also logged on the head node in /var/log/messages:

$ sudo grep compute-1-11.local /var/log/* |grep -vE 'automount|snmpd|qmgr|smtp|pam_unix|Accepted publickey' > ~/rt_1526/compute-1-11.syslog

/var/log/messages:Mar 6 00:26:22 compute-1-11.local pbs_mom: LOG_ERROR::Read-only file system (30) in job_purge, Unlink of job file failed

/var/log/messages:Mar 6 00:26:22 compute-1-11.local pbs_mom: LOG_ERROR::Read-only file system (30) in remtree, unlink failed on /opt/torque/mom_priv/jobs/34038.sophie.TK

/var/log/messages:Mar 6 00:26:22 compute-1-11.local pbs_mom: LOG_ERROR::Read-only file system (30) in job_purge, Unlink of job file failed

and in /var/log/kern:

$ sudo tail /var/log/kern

Mar 5 10:05:00 compute-1-11.local kernel: Aborting journal on device sda1.

Mar 5 10:05:01 compute-1-11.local kernel: ext3_abort called.

Mar 5 10:05:01 compute-1-11.local kernel: EXT3-fs error (device sda1): ext3_journal_start_sb: Detected aborted journal

Mar 5 10:05:01 compute-1-11.local kernel: Remounting filesystem read-only

Mar 7 05:18:06 compute-1-11.local kernel: Memory for crash kernel (0x0 to 0x0) notwithin permissible range

There are a few things I've learned from this:

- checkjob | grep Messages -- but there's no standard format for this.

- I really need to send alerts when /var/log/kern gets written to.

I've started to put some of these commands in a sub -- that's a

really awesome framework from 37 signals to collect commonly-used

commands together. In this case, I've named the sub "sophie", after

the cluster I work on (named in turn after the daughter of the PI).

You can find it on github or my own server (github is great,

but what happens when it goes away? ...but that's a rant for another

day.) Right now there are only a few things in there, and they're

somewhat specific to my environment, and doubtless they could be

improved -- but it's helping a lot so far.

Tags:

rocks

hpc

sysadmin

04 Mar 2013

This was a busy-ass day, yo. Got up at 5.30am to make beer, only to

find out that a server at work had gone down and its ILOM no longer

works. A few hours later, I've convinced everyone that a trip to UBC

would be lovely; I go in, reboot the server and we drive back. It's

no 6000 km from Calgary to California and back like my brother does,

but for us that's a long drive.

And then it's time to make beer, because I've left it mashing

overnight. Boil, chill, sanitize, pitch, lug, clean, and we're one

batch closer to 50 (50!). A call to my parents (oh yeah: Dad, you

guys can totally stay here in May) and then its supper. And then

it's time for astronomizing. Computers, beer and astronomy: this day

had it all.

So tonight's run was mostly about trying out the manual setting

circles. I don't have a tablet or smart phone to run something like

Stellarium on, so for now I'm printing out a spreadsheet with a

three-hour timeline, 15 minute intervals, of whatever Messiers are

above the horizon.

How did it work? Well, first I zeroed the azimuth on Kochab (Beta UM)

rather than Polaris, and kept wondering why the hell the azimuth was

off on everything. I realized my mistake, set things right, and tried

again. And...it worked well, when I could recognize things.

M42, for example, was easy. (It was the first thing I found by

dialing everything in, and when I took a look there was a satellite

crossing the FOV. Neat!) But then, it's big, easy to recognize, and

i've seen it before. Ditto M45. M1? Not so much; I haven't seen it

before, and I didn't have a map ready to look at. M35,

surprisingly, was hard to find; M34 was relatively easy, and M36

was found mainly because I knew what to look for in the finder.

This should not be surprising. I've been tracking down objects by

starhopping for a while now, so why I thought it would be easier now

that I could dial stuff in is beyond me. It's my first

time, and the positions were calculated for Vancouver, not New West

(though I'm curious how much diff that actually makes).

There were some other things I looked for, though.

M51: found the right location via starhopping, and confirmed on my

chart. But could I see it? Could I bollocks.

M50: Pretty sure I did see this; looking at sketches, they seem

pretty similar to what I saw. (And that's another thing: it really

does feel too easy, like I haven't earned it, and I can't be sure I've

really found it.) Oh, and saw Pakan 3, an asterism shaped like a

3/E/M/W, nearby.

M65/M66: Maybe M65; found the location via starhopping and

confirmed the position in my chart. Seems like I had the barest hint

of M65 visible.

At the request of my kids:

Jupiter: Three bands; not as steady as I thought it would be.

Pleidies: Very nice, but I do wis I had a wider field lens.

M36: Eli suggested a star cluster, aso I went with this. Lovely X

shape.

Betelgeuse: Nice colour. Almost forgot about this, and had to look

at it through trees before I went home.

Tags:

beer

sysadmin

astronomy

geekdad

20 Feb 2013

Clear night? I'm out. "Don't you work tomorrow?" ...Sorry, not sure I

understand you.

Took out the folding table I got at Costco for the first time, and it

worked pretty well. It was surprisingly easy to balance on the

handcart, and it was incredibly handy to have around. Not going back.

M41: Now that Sirius is higher above the horizon, this is doable.

Found it in binoculars, sketched it quickly through the scope before

it went behind a tree.

M43: Tried, and I'm pretty sure I got it. Not sure if it was the

moon or what, but this was definitely an averted-vision target.

Still, marking it done. That's two more Messiers.

Almach: Yeah, that is a pretty double. I like Delta Cephei better,

though.

NGC 2683, AKA the UFO galaxy: This took a long time to track down,

and I wasn't even sure I'd found it. But I sketched it, and comparing

that to other sketches (this one in particular -- and,

surprisingly, this photo) I can see that I did, um, see it,

hurrah! But only with difficulty and averted vision...no detail

whatsoever.

Jupter: Pretty as always.

Moon: Lovely views of the Montes Teneriffe, looking like chicken

claws, plus Helicon and Le Verrier craters. Helicon is 25km in

diameter, and that's kind of amazing: I can see something on another

world that is the size of my commute to work.

Tags:

astronomy

18 Feb 2013

Q from a user today that took two hours (not counting this entry) to

track down: why are my jobs idle when showq shows there are a lot of

free processors? (Background: we have a Rocks cluster, 39 nodes, 492

cores. Torque + Maui, pretty vanilla config.)

First off, showq did show a lot of free cores:

$ showq

[lots of jobs]

192 Active Jobs 426 of 492 Processors Active (86.59%)

38 of 38 Nodes Active (100.00%)

IDLE JOBS----------------------

JOBNAME USERNAME STATE PROC WCLIMIT QUEUETIME

32542 jdoe Idle 1 1:00:00:00 Fri Feb 15 13:55:55

32543 jdoe Idle 1 1:00:00:00 Fri Feb 15 13:55:55

32544 jdoe Idle 1 1:00:00:00 Fri Feb 15 13:55:56

Okay, so why? Let's take one of those jobs:

$ checkjob 32542

checking job 32542

State: Idle

Creds: user:jdoe group:example class:default qos:DEFAULT

WallTime: 00:00:00 of 1:00:00:00

SubmitTime: Fri Feb 15 13:55:55

(Time Queued Total: 2:22:55:26 Eligible: 2:22:55:26)

Total Tasks: 1

Req[0] TaskCount: 1 Partition: DEFAULT

Network: [NONE] Memory >= 0 Disk >= 0 Swap >= 0

Opsys: [NONE] Arch: [NONE] Features: [NONE]

IWD: [NONE] Executable: [NONE]

Bypass: 196 StartCount: 0

PartitionMask: [ALL]

Flags: HOSTLIST RESTARTABLE

HostList:

[compute-1-3:1]

Reservation '32542' (21:17:14 -> 1:21:17:14 Duration: 1:00:00:00)

PE: 1.00 StartPriority: 4255

job cannot run in partition DEFAULT (idle procs do not meet requirements : 0 of 1 procs found)

idle procs: 216 feasible procs: 0

Rejection Reasons: [State : 1][HostList : 38]

Note the bit that says:

job cannot run in partition DEFAULT (idle procs do not meet requirements : 0 of 1 procs found)

If we run "checkjob -v", we see some additional info (all the rest is the same):

Detailed Node Availability Information:

compute-2-1 rejected : HostList

compute-1-1 rejected : HostList

compute-3-2 rejected : HostList

compute-1-3 rejected : State

compute-1-4 rejected : HostList

compute-1-5 rejected : HostList

[and on it goes...]

This means that compute-1-3, one of the nodes we have, has been

assigned to the job. It's busy, so it'll get to the job Real Soon

Now. Problem solved!

Well, no. Because if you run something like this:

showq -u jdoe |awk '/Idle/ {print "checkjob -v " $1}' | sh

then a) you're probably in a state of sin, and b) you'll see that

there are a lot of jobs assigned to compute-1-3. WTF?

Well, this looks pretty close to what I'm seeing. And as it

turns out, the user in question submitted a lot of jobs (hundreds)

all at the same time. Ganglia lost track of all the nodes for a

while, so I assume that Torque did as well. (Haven't checked into

that yet...trying to get this down first; documenting stuff for Rocks

is always a problem for me.) The thread reply suggests qalter, but

that doesn't seem to work.

While I'm at it, here's a list of stuff that doesn't work:

- "qalter -l neednodes= " ; maui restart

- "runjob -c "; maui restart

- "runjob -c "; "releasehold " ; maui restart

(Oh, and btw turns out runjob is supposed to be replaced by

mjobctl, but mjobctl doesn't appear to work. True story.)

So at this point I'm stuck suggesting two things to the user:

- Don't submit umpty jobs at once

- Either wait for compute-1-3 to work through all your jobs, or cancel

and resubmit them.

God I hate HPC sometimes.

Helpful links that explained some of this:

Tags:

hpc

rocks

15 Feb 2013

I have a love-hate relationship with Bacula. It works, it's got

clients for Windows, and it uses a database for its catalog (a big

improvement over what I'd been used to, back in the day, from

Amanda...though that's probably changed since then). OTOH, it has had

an annoying set of bugs, the database can be a real bear to deal with,

and scheduling....oh, scheduling. I'm going to hold off on ranting on

scheduling. But you should know that in Bacula, you have to be

explicit:

Schedule {

Name = "WeeklyCycle"

Run = Level=Full Pool=Monthly 1st sat at 2:05

Run = Level=Differential Pool=Daily 2nd-5th sat at 2:05

Run = Level=Incremental IncrementalPool=Daily FullPool=Monthly 1st-5th mon-fri, 2nd-5th sun at 00:41

}

This leads to problems on the first Saturday of the month, when all

those full backups kick off. In the server room itself, where the

backup server (and tape library) are located, it's not too bad;

there's a GigE network, lots of bandwidth, and it's a dull roar, as

you may say. But I also back up clients on a couple of other

networks on campus -- one of which is 100 Mbit. Backing up 12 x 500GB

home partitions on a remote 100 MBit network means a) no one on that

network can connect to their servers anymore, and b) everything takes

days to complete, making it entirely likely that something will fail

in that time and you've just lost your backup.

One way to do that is to adjust the schedule. Maybe you say that you

only want to do full backups every two months, and to not do

everything on the same Saturday. That leads to crap like this:

Schedule {

Name = "TwoMonthExpiryWeeklyCycleWednesdayFull"

Run = Level=Full Pool=MonthlyTwoMonthExpiry 2nd Wed jan,mar,may,jun,sep,nov at 20:41

Run = Level=Differential Pool=Daily 2nd-5th sat at 2:05

Run = Level=Differential Pool=Daily 1st sat feb,apr,jun,aug,oct,dec at 2:05

Run = Level=Incremental IncrementalPool=Daily FullPool=MonthlyTwoMonthExpiry 1st-5th mon-tue,thu-fri,sun, 2nd-5th wed at 20:41

Run = Level=Incremental IncrementalPool=Daily FullPool=MonthlyTwoMonthExpiry 2nd Wed jan,mar,may,jun,sep,nov at 20:41

}

That is awful; it's difficult to make sure you've caught everything,

and you have to do something like this for Thursday, Friday,

Tuesday...

I guess I did rant about Bacula scheduling after all.

A while back I realized that what I really wanted was a queue: a

list of jobs for the slow network that would get done one at a time.

I looked around, and found Perl's IPC::DirQueue. It's a pretty

simple module that uses directories and files to manage queues, and

it's safe over NFS. It seemed a good place to start.

So here's what I've got so far: there's an IPC::Dirqueue-managed queue

that has a list of jobs like this:

- desktop-01-home

- desktop-01-etc

- desktop-01-var

- desktop-02-home

- (etc, etc)

I've got a simple Perl script that, using IPC::DirQueue, take the

first job and run it like so:

open (BCONSOLE, "| /usr/bin/bconsole");

print BCONSOLE "run job=" . $job . " level=Full pool=Monthly yes";

close (BCONSOLE);

I've set up a separate job definition for the 100Mbit-network clients:

JobDefs {

Name = "100MbitNetworkJob

Type = Backup

Client = agnatha-fd

Level = Incremental

Schedule = "WeeklyCycleNoFull"

Storage = tape

Messages = Standard

Priority = 10

SpoolData = yes

Pool = Daily

Cancel Lower Level Duplicates = yes

Cancel Queued Duplicates = yes

RunScript {

RunsWhen = After

Runs On Client = No

Command = "/path/to/job_queue/bacula_queue_mgr -c %l -r"

"WeeklyCycleNoFull" is just what it sounds like: daily incrementals,

weekly diffs, but no fulls; those are taken care of by the queue. The

RunScript stanza is the interesting part: it runs baculaqueuemgr (my

Perl script) after each job has completed. It includes the level of

the job that just finished (Incremental, Differential or Full), and

the "-r" argument to run a job.

The Perl script in question will only run a job if the one that just

finished was a Full level. This was meant to be a crappy^Wsimple way

of ensuring that we run Fulls one at a time -- no triggering a Full if

an Incremental has just finished, since I might well be running a

bunch of Incrementals at once.

It's not yet entirely working. It works well enough if I run the

queue manually (which is actually tolerable compared to what I had

before), but Bacula running the "baculaqueuemgr" command does nto

quite work. The queue module has a built-in assumption about job

lifetimes, and while I can tweak it to be something like days (instead

of the default, which I think is 15 minutes), the script still notes

that it's removing a lot of stale lockfiles, and there's nothing left

to run because they're all old jobs. I'm still working on this, and I

may end up switching to some other queue module. (Any suggestions,

let me know; pretty much open to any scripting language.)

A future feature will be getting these jobs queued up automagically by

Nagios. I point Nagios at Bacula to make sure that jobs get run often

enough, and it should be possible to have Nagios' event handler

enqueue a job when it figure's it's overdue.

For now, though, I'm glad this has worked out as well as it has. I

still feel guilty about trying to duplicate Amanda's scheduling

features, but I feel a bit like Macbeth: in blood stepped in so

far....So I keep going. For now.

Tags:

backups

bacula

13 Feb 2013

(Yep, that's more than a month since the last time...)

Tonight was a rare clear night. Just before putting the kids to bed,

I stepped outside to see if it was clear -- and saw the ISS heading

over! Talk about good timing...I ran back inside and got my youngest

son to come out so we could wave at @Cmdr_Hadfield. Sadly,

doesn't seem like he saw us.

I was expecting the clouds to roll in, but they didn't. I was

dithering about whether to go out, and my wife said "Why don't you

just go? It'll make you happy." Now that's a) good advice and b) a

wonderful partner. I'm lucky.

So out, scope not even cooled, and did I care? Did I bollocks. It was

wonderful to be out, and the clouds really did hold off a long time.

M81 and M82 -- Holy crap, I found them again. It's been a while

since I saw them, and it was wonderfully encouraging to know I could

track them down.

Moon -- quick look, as it was setting and being covered by clouds.

Fun fact: while walking home from work, I was surprised at how small

the moon was. Then I remembered that's just its regular size, and

it's been a while since I saw it.

Polaris -- the engagement ring. Pretty. Fits into a 40mm

eyepiece view ('bout a degree FOV).

M34 -- First time, which means I've got one more Messier bagged.

What a pretty cluster! Took the time to sketch it. Looks like a

triskelion to me. (UPDATE: Whoops, actually found it last

September. Dangit.)

M42 -- Beautiful, beautiful. I've been looking at sketches of it

recently, and that helped me notice more detail, like the fish-mouth shape

and the bat wings. But I think I was not looking in the right place

for M43. Next time. (BTW, this is just a lovely sketch.) I

think I saw the E star, which is nice.

Brief attempt to find M1, but by that time the clouds were rolling

in. What a lovely, satisfying night.

Tags:

astronomy

geekdad

01 Feb 2013

I can't remember where I came across it, but this Bash Pitfalls

page is awesome.

Tags:

bash

programming

25 Jan 2013

Some time between mid-December and January at $WORK, we noticed that

FTP transfers from the NIH NCBI were nearly always failing; maybe

one attempt in 15 or so would work. I got it down to this test case:

wget ftp://ftp.ncbi.nlm.nih.gov/geo/series/GSE34nnn/GSE34777/matrix/GSE34777_series_matrix.txt.gz

The failed downloads would not fail right away, but instead hung when

the data connection from the remote end should have transferred the

file to us. Twiddling passive did nothing. If I tried HTTP instead

of FTP, I'd get about 16k and then the transfer would hang.

That hostname, ftp.ncbi.nlm.nih.gov, resolves to 4-6 different IP

addresses, with a TTL of 30 seconds. I found that ftp transfers

from these IP addresses failed:

- 130.14.250.10

- 130.14.250.11

- 130.14.250.12

but this one worked:

The A record you get doesn't seem to have a pattern, so I presume it's

being handled by a load balancer rather than simple round-robin. It

didn't come up very often, which I think accounts for the low rate of

success.

At first I thought this might indicate network problems here at $WORK,

but the folks I contacted insisted nothing has changed, we're not

behind any additional firewalls, and all our packets take the same

route to both sets of addresses. So I checked our firewall, and

couldn't find anything there -- no blocked packets, and to the best of

my knowledge no changed settings. Weirdly, running the wget command

on the firewall itself (which runs OpenBSD, instead of CentOS Linux

like our servers) worked...that was an interesting rabbit hole. But

if I deked out the firewall entirely and put a server outside, it

still failed.

Then I tripped over the fix: lowering the MTU from our usual 9000

bytes to 8500 bytes made the transfers work successfully. (Yes, 8500

and no more; 8501 fails, 8500 or below works.) And what has an MTU of

8500 bytes? Cisco Firewall Service Modules, which are in use

here at $WORK -- though not (I thought) on our network. I contacted

the network folks again, they double-checked, and said no, we're not

suddenly behind an FSM. And in fact, their MTU is 8500 nearly

everywhere...which probably didn't happen overnight.

Changing the MTU here was an imposing thought; I'd have to change

it everywhere, at once, and test with reboots...Bleah. Instead, I

decided to try TCP MSS clamping instead with this iptables

rule:

iptables -A OUTPUT -p tcp -d 130.14.250.0/24 --tcp-flags SYN,RST SYN -j TCPMSS --set-mss 8460

(Again, 8460 or below works; 8461 or above works fine.) It's a hack,

but it works. I'm going to contact the NCBI folks and ask if

anything's changed at their end.

Tags:

network

sysadmin

jumboframes

21 Jan 2013

Two things I keep forgetting:

- If you've got an account with a shell like "/sbin/nologin", and you

want to run su but you keep getting "This account is currently not

available", you can run su like so:

su -s /bin/bash -c [command] [userid]

echo 3 > /proc/sys/vm/drop_caches

Thanks to the good folks at Server Fault/Stack Overflow.

Tags:

13 Jan 2013

I took the kids out this morning to look at Saturn through the

telescope. It was a rare clear day; the clouds of the last three

months seem to have taken a break. And it was cold -- probably -5C

out there.

First, though, we had a visit from Mustard Boy! He uses a

frisbee for a weapon.

Arlo took this picture through the viewfinder, and it turned out

surprisingly well. Saturn is just visible above (well, below) the

rooftops. It wasn't the best location for viewing, but it was nice

and close.

This, by constrast, is the crappiest afocal picture ever.

But on to the kids! The tempation to look down the tube of the

telescope is nigh-inescapable.

Notice the stickers on the telescope. Some of those I added, but

approximately 6 x 10^8 were added yesterday when the kids were bored.

Eli is small enough that I have to lift him up to see through the eyepiece.

Saturn was small -- 100x doesn't show a very big image -- but we had

fun, didn't get cold and no one got their toungue stuck to the

telescope. I declare that success.

Tags:

astronomy

geekdad

12 Jan 2013

First observing run of 2013! It was absolutely, stunningly clear last

night...but that was the RASC lecture (exoplanets w/the MOST

telescope; interesting, but I already knew much of the talk). Tonight

it was hazy in the north, and it slowly stretched to the south -- but

that took a while, and I had a couple hours anyhow. Which was good,

because ZOMG it was cold.

Started out with Jupiter, 'cos how can you avoid Jupiter when it's

up there so big and bright? The GRS was obvious, which was nice -- I

picked it out without knowing that it was going to be visible.

M35: noticed a ragged curve of maybe 14 stars (suns!) anchored

by two bright stars at the end...kinda looks like a frown. Maybe

able to see colours (left: blue; right: pale yellow). I thought I'd

found NGC 2158, but after comparing it with some sketches I

think I was wrong.

NGC 2392, the Clown Face Nebula/Eskimo Nebula. I cannot say that it

was terribly impressive, even with an O3 filter. (Did I mention that

I bought an O3 filter? 'Cos I did.)

M31: Only mentioned in the spirit of completeness.

M42 with the O3 filter is pretty impressive.

M47: I was unsure if I'd found this, so I made a quick sketch. When

I got home, I was able to verify that I really had it. Good to find

it, but man, not much to look at. Close to the horizon, and there may

have been some haze in there too.

After that, I headed in; my feet were freezing. Thought about

improvements for next time (don't use a metal clipboard, get a clip-on

flashlight, buy a folding table) while packing up, and came home to

wine, my wife and the fire. Nice.

Tags:

astronomy

07 Jan 2013

For a while I've been looking for a gallery to host at home --

something we could use to share pix with family and the like. I've

looked at Gallery and toyed with MediaGoblin (among others), but

they've all been missing the functions I really wanted: scriptability

and mas uploads. I figured I wanted a static gallery generator, but I

couldn't seem to find any.

A few days ago, though, I came across Lazygal, and it's

perfect. Simple commands, nice look, and best of all it's easy to

combine it with Git to get mass uploads. Still playing with

post-update hooks, but it shouldn't be too hard to get this working.

Next up: teach my wife and kids git; only half-joking.

Tags:

04 Jan 2013

I found out before Xmas that my request for an office had been

approved. We had some empty ones hanging around, and my boss

encouraged me to ask for one. Yesterday I worked in it for the first

time; today I started moving in earnest, and moved my workstation

over.

And my god, is it ever cool. The office itself is small but nice --

lots of desk space, a bookshelf, and windows (OMG natural light). But

holy crap, was it ever wonderful to sit in there, alone,

uninterrupted, and work. Just work. Like, all the stuff I want to

do? I was able to sit down, plan out my year, and figure out that in

the grand scheme of things it's not too much. (The problem is wanting

to do everything right away.) And today I worked on getting our

verdammt printing accounting exposed to Windows users, setting up

Samba for the first time in eons and even getting it to talk to LDAP.

Not only that -- not only that, I say, but when I had interruptions --

when people came to me with questions -- it was fine. I didn't feel

angry, or lost, or helpless. I helped them as best I could, and moved

on. And got more shit done in a day than I've done in a week.

I'm going to miss hanging out with the people in the cubicle I was

in. Yes, they're only ten metres away, but there's a world of

difference between having people there and having to walk over to

see them. I'm not terribly outgoing, and it's just in the last six

months or so that I've really come to enjoy all the people around me.

They're fun, and it's nice to be able to talk to them. (There's even

a programmer who homebrews, for a wonderful mixture of tech and beer

talk.) But oh my sweet darling door made of steel and everything, I

love this place.

Tags:

work

sysadmin

03 Jan 2013

First day back at $WORK after the winter break yesterday, and

some...interesting...things. Like finding out about the service

that didn't come back after a power outage three weeks ago. Fuck.

Add the check to Nagios, bring it up; when the light turns green, the

trap is clean.

Or when I got a page about a service that I recognized as having,

somehow, to do with a webapp we monitor, but no real recollection of

what it does or why it's important. Go talk to my boss, find out he's

restarted it and it'll be up in a minute, get the 25-word version of

what it does, add him to the contact list for that service and add the

info to documentation.

I start to think about how to include a link to documentation in

Nagios alerts, and a quick search turns up "Default monitoring alerts

are awful" , a blog post by Jeff Goldschrafe about just this. His

approach looks damned cool, and I'm hoping he'll share how he does

this. Inna meantime, there's the Nagios config options "notes",

"notesurl" and "actionurl", which I didn't know about. I'll start

adding stuff to the Nagios config. (Which really makes me wish I had

a way of generating Nagios config...sigh. Maybe NConf?)

But also on Jeff's blog I found a post about Kaboli, which lets

you interact with Nagios/Icinga through email. That's cool. Repo

here.

Planning. I want to do something better with planning. I've got RT

to catch problems as they emerge, and track them to completion.

Combined with orgmode, it's pretty good at giving me a handy reference

for what I'm working on (RT #666) and having the whole history

available. What it's not good at is big-picture

planning...everything is just a big list of stuff to do, not sorted

by priority or labelled by project, and it's a big intimidating mess.

I heard about Kanban when I was at LISA this year, and I want to

give it a try...not suure if it's exactly right, but it seems close.

And then I came across Behaviour-driven infrastructure through

Cucumber, a blog post from Lindsay Holmwood. Which is damn cool,

and about which I'll write more another time. Which led to the

Github repo for a cucumber/nagios plugin, and reading more about

Cucumber, and behaviour-driven development versus test-driven

development (hint: they're almost exactly the same thing).

My god, it's full of stars.

Tags:

sysadmin

nagios

cfengine

testing

documentation

programming

lisa

01 Jan 2013

I'm still digesting all the stuff that came out of LISA this

year. But there are a number of things I want to try out:

I learned a little bit about agile development, mainly from Geoff

Halprin's training material and keynote, and it seemed

interesting. One of the things that resonated with me was the idea

of only having a small number of work stages for stuff: in the

queue, next, working, and done. (Going without the info here, so

quite possibly wrong.) I like that: work on stuff in two-week

chunks, commit to that and get it done. That seems much more

manageable than having stuff in the queue with no real idea of a

schedule. And a two-week chunk is at least a good place to start:

interruptions aren't about to go away any time soon, and I can

adjust this as necessary.

A corollary is that it's probably not best to plan more than two

such things in a month. I'm thinking about things like switching

from Nagios to Icinga, setting up Ganeti, and such: more than I can

do in an hour, less than a semester's work.

I really want to work on eliminating pain points this year.

Icinga's one; Nagios' web interface is painful. (I'd also like to

look at Sensu.) I want to make backups better. I want to add

proper testing for Cfengine with Vagrant and Git, so I can go on more

than a wing and a prayer when pushing changes.

I also need to work more closely with the faculty in my department.

Part of that is committing to more manageable work, and part of that

is just following through more. Part of it, though, is working with

people that intimidate me, and letting them know what I can do for

them.

I need to manage my time better, and I think a big part of that is

interruptions. I've just been told I'm getting an office, which is

a mixed blessing. There's a certain amount of flux in the office,

and I've been making friends with the people around me lately. I'll

miss them/that, but I think the ability to retreat and work on

something is going to be valuable.

Another part of managing time is, I think/hope, a better routine.

Like: one hour every day for long-term project work. (The office

makes this easier to imagine.) Set times for the things I want to

get done at home (where my free time comes in one-hour chunks).

Deciding if I want to work on transit (I can take my laptop home

with me, and it's a 90 minute commute), and how (fun projects? stuff

I can't get done at work? blue-sky stuff?). If, because a. my eyes

will bug out if I stare at a screen all day and b. I firmly intend

to keep a limit on my work time. So it'd probably be a couple days

a week, to allow time for all the podcasts and books I want to

inhale.

Microboxing for productivity. Interesting stuff.

Kanban. Related to Agile, but I forgot about it. Pomodoro + Emacs + Orgmode, too.

Probably more as I think of it...but right now it's time to sleep.

5.30am comes awful early after 11 days off...

Tags:

sysadmin

lisa

01 Jan 2013

Continuing our long tradition of being old, my wife and I barely made

it to 9.48pm last night before falling asleep. But we made up for it

this morning, when we took the kids on a walk to find a graveyard.

Okay, so there's one nearby, but it sounds exciting (especially when

the kids don't realize that "graveyard" is the same as "cemetery", and

thus the same boring thing you drive by all the time). But it got

even better: the fog was thick as pea soup, and we found THE RAVINE.

I mean, look at these pictures:

Clara kept telling the boys that the popotch would get them if they

wandered off the trail. (Popotch == semi-made-up Italian for witch)

I was quite scared of the popotch.

So it turned out we were in the Glenbrook Ravine Park:

We've been in New West six years now and never once knew it was

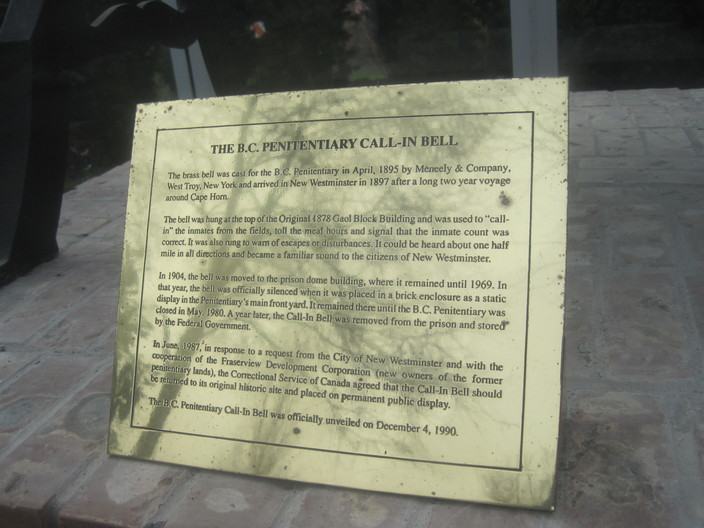

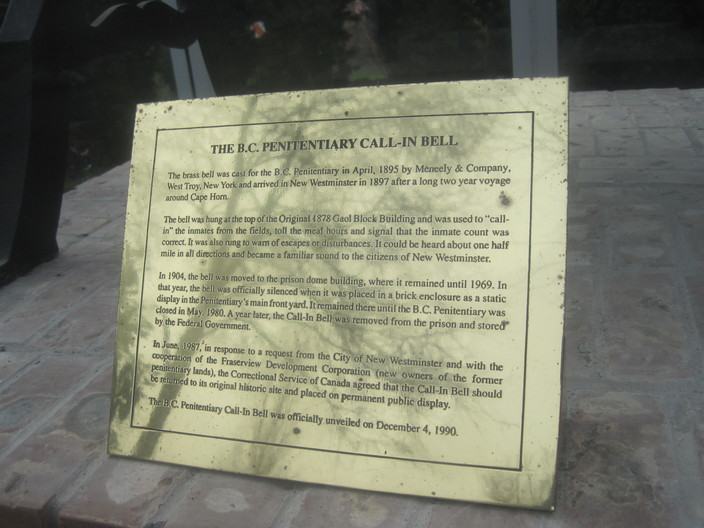

there. Not only that, but there's a 118 year-old bell:

And it's from an old prison...

which is just up the street:

After this we had to walk up an enormous hill, whipping the children

the whole way. And it was then that we finally got to the

graveyard, but they were too tired to do more than groan at how much

further they had to walk to get home. I mean, not even the Lacrosse

Hall of Fame could get them excited.

And finally, we done had a battery splosion:

This came from a toy the boys got for Xmas. The battery ran out

(quite normally, I should add), and I had taken it out of the toy so

we could replace it. I wrapped it in an old copy of my resume I'd

been working on, tucked it into my pocket in preparation for a trip to

the dollar store, then promptly forgot about it. 24 hours later I was

sitting around minding my own business when POP! I had no idea what

the hell'd just happened, but when I dug around in my pocket I found

the paper and unwrapped it. The cap popped right off; the bit on the

left was something like sponge or wool, and was quite hot.

I think the ink is conductive; my wife thinks maybe the battery got

too hot just sitting around. At some point I'll conduct an

experiment.

Tags:

geekdad

30 Dec 2012

Once again it has been a goddamned long time since I got out with

the scope. The skies here have been cloudy for months, it seems, with

very, very few breaks. Tonight was one of them, and I was itching to

try out the new O3 filter I'd bought from the good folks at Vancouver

Telescopes...went in looking for finderscope caps and came out

with the caps and a new filter. (These folks are awesome, btw. They

always have time to chat, and I've never been to a friendlier

store. When I finally get the cash together to buy that 8" Celestron,

I'm damn sure going there.)

We were over at my in-laws today, and as it happened I'd taken over

the Galileoscope, attached to a photo tripod. It's not the most

stable mount, but it does the trick. We set it up in their back yard

and looked at Jupiter. I've got an old Kellner eyepiece that gives

28X, so we could see the two equatorial belts and, with careful

squinting, all four moons. It was the first time my in-laws had seen

Jupiter through a scope, and I think they enjoyed it.

The clouds held off while we drove home and put the kids to bed, and I

headed out to the local park. The clouds were starting to move in, so

I started looking in a hurry.

Jupiter: The seeing seemed quite steady tonight, and I was able to

see a fair bit of detail. The GRS was transiting while I was there,

which was neat. It was fairly easy to see (now that I know what I'm

looking for). There was a long, trailing streamer (not sure that's

the right term) coming off the GRS, and I swear I could see it was

blue at times. (You can see a really great picture of it here;

that guy's photos are simply amazing.)

M42: Viewed in a hurry, as I was afraid the clouds were rolling in.

I used this as a chance to try out the O3 filter, and I'm definitely

intrigued. I'd write more, but I really was in a hurry and didn't

savour this at all.

M37 and M36: I have always had a hard time finding these; in fact,

it was my second winter observing before I could find them. Now, I'm

happy to know I can repeat the feat. The clouds rolled in bbefore I

could find M38.

IC 405 (The Flaming Star Nebula): While looking at the star atlas I

noticed this was in the neighbourhood. I found the star, and tried

looking at it with the O3 filter, but could not see anything. Sue

French says in "Deep Sky Wonders" that it responds well to

hydrogen-beta filters, "but a narrowband filter can also be of help."

Not for me, but again I was in a hurry.

Luna: Ah, Luna. The mountains of Mare Crisium, and Picard just

going into shadow; Macrobius; Hercules and Atlas. The O3 filter made

a fine moon filter. :-)

A short and hurried session, but fun nonetheless.

Tags:

astronomy

geekdad

28 Dec 2012

It's Xmas vacation, and that means it's time to brew. Mash was at 70

C, which was a nice even 5 C drop in the strike water temp. 7.5

gallons went in, and 6 gallons of wort came out. It was not raining

out, despite the title, so I brewed outside:

My kids came out to watch; the youngest stayed to help.

The keggle was converted by my father-in-law, a retired millwright; he

wrote the year (2009) and his initial using an angle grinder.

The gravity was 1.050, so I got decent efficiency for a change -- not

like last time.

On a whim, Eli decided to make the 60 minute hop addition a FWH instead:

Ah, the aluminum dipstick. No homebrewer should be without one.

Eli demonstrated his command of Le Parkour...

and The Slide:

"Hey, it's Old Man Brown, sittin' on his porch eatin' soup an' making

moonshine again!"

Eventually it was time to pitch the yeast. We took turns. I took

this one of Eli...

...and he took this one of me:

Isn't it beautiful? Oh, and the OG was 1.062.

Tags:

beer

geekdad